Details

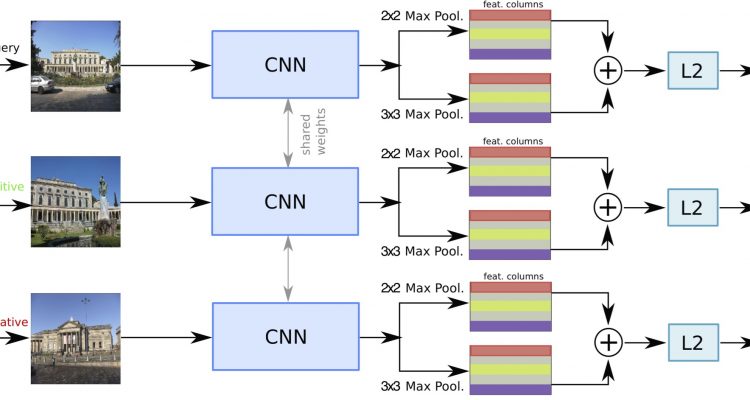

In the first part of the talk, Lamberto Ballan will show that images that are very difficult to recognize on their own may become clearer in the context of a neighborhood of related images with similar social-network metadata. This intuition is used to improve multi-label image annotation, achieving state-of-the-art results on the NUS-WIDE dataset. The model uses image metadata nonparametrically to generate neighborhoods of related images, then uses a deep neural network to blend visual information from the image and its neighbors.
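
As a rough illustration of the neighborhood idea described above, the following minimal sketch finds an image's nearest neighbors by tag overlap and pools their visual features. Everything in it is an assumption made for illustration: the Jaccard similarity over tag sets, the element-wise max pooling, and the function names `nearest_neighbors`, `pool_neighbors`, and `blend` are hypothetical, and the concatenation in `blend` merely stands in for the learned deep network mentioned in the abstract.

```python
from typing import Dict, List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Jaccard similarity between two tag sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def nearest_neighbors(
    query_tags: Set[str], corpus_tags: Dict[str, Set[str]], k: int
) -> List[str]:
    """Return the names of the k corpus images whose tags best match the query.

    Nonparametric: nothing is trained, the neighborhood is simply looked up.
    Ties are broken alphabetically so the result is deterministic.
    """
    ranked = sorted(
        corpus_tags,
        key=lambda name: (-jaccard(query_tags, corpus_tags[name]), name),
    )
    return ranked[:k]


def pool_neighbors(features: List[List[float]]) -> List[float]:
    """Element-wise max over the neighbors' feature vectors (assumed pooling)."""
    return [max(column) for column in zip(*features)]


def blend(query_feature: List[float], neighbor_features: List[List[float]]) -> List[float]:
    """Concatenate the query's features with the pooled neighbor features.

    A real model would feed this into a trained network; the concatenation
    here is only a placeholder for that learned blending step.
    """
    return query_feature + pool_neighbors(neighbor_features)


if __name__ == "__main__":
    # Toy metadata and two-dimensional "visual features" for three images.
    tags = {"a": {"beach", "sea"}, "b": {"beach", "sea", "sunset"}, "c": {"city"}}
    feats = {"a": [1.0, 5.0], "b": [3.0, 2.0], "c": [0.0, 0.0]}
    names = nearest_neighbors({"beach", "sea"}, tags, k=2)
    print(names, blend([0.5, 0.5], [feats[n] for n in names]))
```

Because the neighborhood is computed directly from metadata, adding new images to the corpus changes the neighbors without retraining anything; only the blending stage would need learned parameters.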

In the second part of the talk, he’ll present his recent work on knowledge transfer for scene-specific motion prediction. Given a single frame of a video, humans can not only interpret the content of the scene but also forecast the near future. This ability is mostly driven by their rich prior knowledge about the visual world, both the dynamics of moving agents and the semantics of the scene. The interplay between these two key elements is exploited to predict scene-specific motion patterns on a novel large dataset collected by UAV on the Stanford campus.