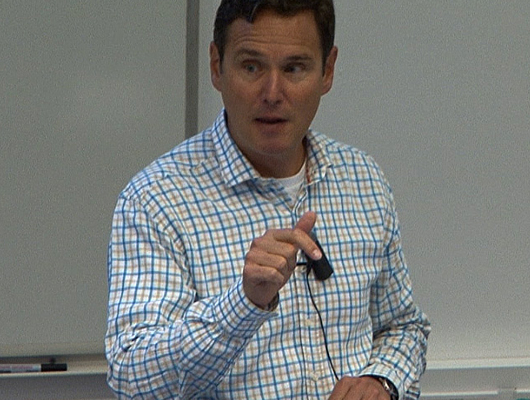

In this talk, Prof. Stan Sclaroff of the Department of Computer Science at Boston University will present work on action recognition and localization in video.

Abstract: Web images and videos present a rich, diverse, and challenging resource for learning and testing models of human actions.

In the first part of the talk, Prof. Sclaroff will describe an unsupervised method for learning human action models. The approach is unsupervised in the sense that it requires no human intervention beyond supplying the action keywords used to form text queries to Web image and video search engines. As a result, the vocabulary of actions can easily be extended simply by issuing additional search engine queries.
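To make the "extend the vocabulary by adding queries" point concrete, here is a minimal sketch of the keyword-to-query step only. The query templates and the `build_queries` helper are hypothetical; the talk does not say how queries are worded, and the actual retrieval and model training are not shown.

```python
# Hypothetical query templates; the talk does not specify the exact query wording.
QUERY_TEMPLATES = ("{action}", "person {action}", "people {action}")


def build_queries(action_keywords):
    """Map each action keyword to the text queries that would be sent to search engines."""
    return {
        action: [template.format(action=action) for template in QUERY_TEMPLATES]
        for action in action_keywords
    }


vocabulary = ["running", "walking"]
queries = build_queries(vocabulary)

# Extending the vocabulary only requires adding a keyword; no manual labeling step.
vocabulary.append("jumping")
queries = build_queries(vocabulary)
print(queries["jumping"])  # ['jumping', 'person jumping', 'people jumping']
```

The only human input is the keyword list, which is what makes adding a new action cheap in this setting.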

Action! Improved Action Recognition and Localization in Video

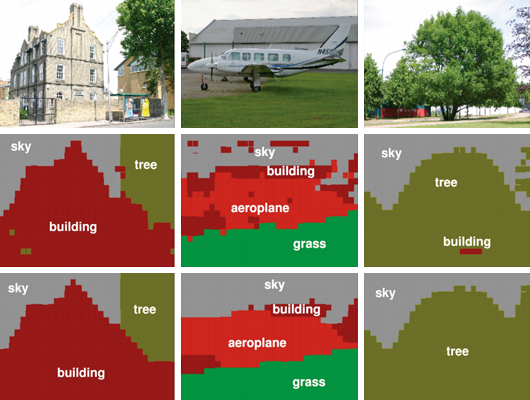

In the second part of the talk, Prof. Sclaroff will describe a Multiple Instance Learning framework that exploits properties of the scene, objects, and humans in video to improve action classification.

In the third part, he will describe a new representation for action recognition and localization, Hierarchical Space-Time Segments, which helps with both recognizing and localizing actions in video. He will also present an unsupervised method that generates this representation from video. The method extracts both static and non-static relevant space-time segments while preserving their hierarchical and temporal relationships.
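The abstract does not spell out which Multiple Instance Learning formulation is used. As a generic illustration only, the sketch below applies the standard MIL assumption: a video is treated as a bag of instances, and the bag is labeled positive if at least one instance is positive. The per-instance scores, the zero threshold, and max-pooling as the bag rule are assumptions for illustration, not the authors' method.

```python
from typing import Sequence


def bag_score(instance_scores: Sequence[float]) -> float:
    """Standard MIL assumption: a bag is as positive as its most positive instance."""
    if not instance_scores:
        raise ValueError("a bag must contain at least one instance")
    return max(instance_scores)


def classify_bag(instance_scores: Sequence[float], threshold: float = 0.0) -> bool:
    """Label a bag positive if at least one instance score exceeds the threshold."""
    return bag_score(instance_scores) > threshold


# Hypothetical per-instance classifier scores for one video. Each instance could be,
# e.g., a candidate person, object, or scene region; only one needs to show the action.
video_bag = [-1.2, -0.4, 0.9, -2.0]
print(classify_bag(video_bag))  # True
```

Max-pooling lets weak, video-level labels train instance-level evidence without knowing in advance which region actually contains the action.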

This work was conducted in collaboration with Shugao Ma and Jianming Zhang (Boston University), and Nazli Ikizler Cinbis (Hacettepe University).

This work appears in the following papers:

- “Web-based Classifiers for Human Action Recognition”, IEEE Transactions on Multimedia

- “Object, Scene and Actions: Combining Multiple Features for Human Action Recognition”, ECCV 2010

- “Action Recognition and Localization by Hierarchical Space-Time Segments”, ICCV 2013