Details

Autonomous vehicles are expected to drive in complex scenarios with several independent, non-cooperating agents. Path planning for safe navigation in such environments cannot rely only on perceiving the present location and motion of other agents; it must instead predict these variables sufficiently far into the future. In this paper we address the problem of multimodal trajectory prediction by exploiting a Memory Augmented Neural Network. Our method learns past and future trajectory embeddings using recurrent neural networks and exploits an associative external memory to store and retrieve such embeddings. Trajectory prediction is then performed by decoding in-memory future encodings conditioned on the observed past. We incorporate scene knowledge in the decoding state by learning a CNN on top of semantic scene maps. Memory growth is limited by learning a writing controller based on the predictive capability of existing embeddings. We show that our method natively performs multimodal trajectory prediction, obtaining state-of-the-art results on three datasets. Moreover, thanks to the non-parametric nature of the memory module, we show how, once trained, our system can continuously improve by ingesting novel patterns.

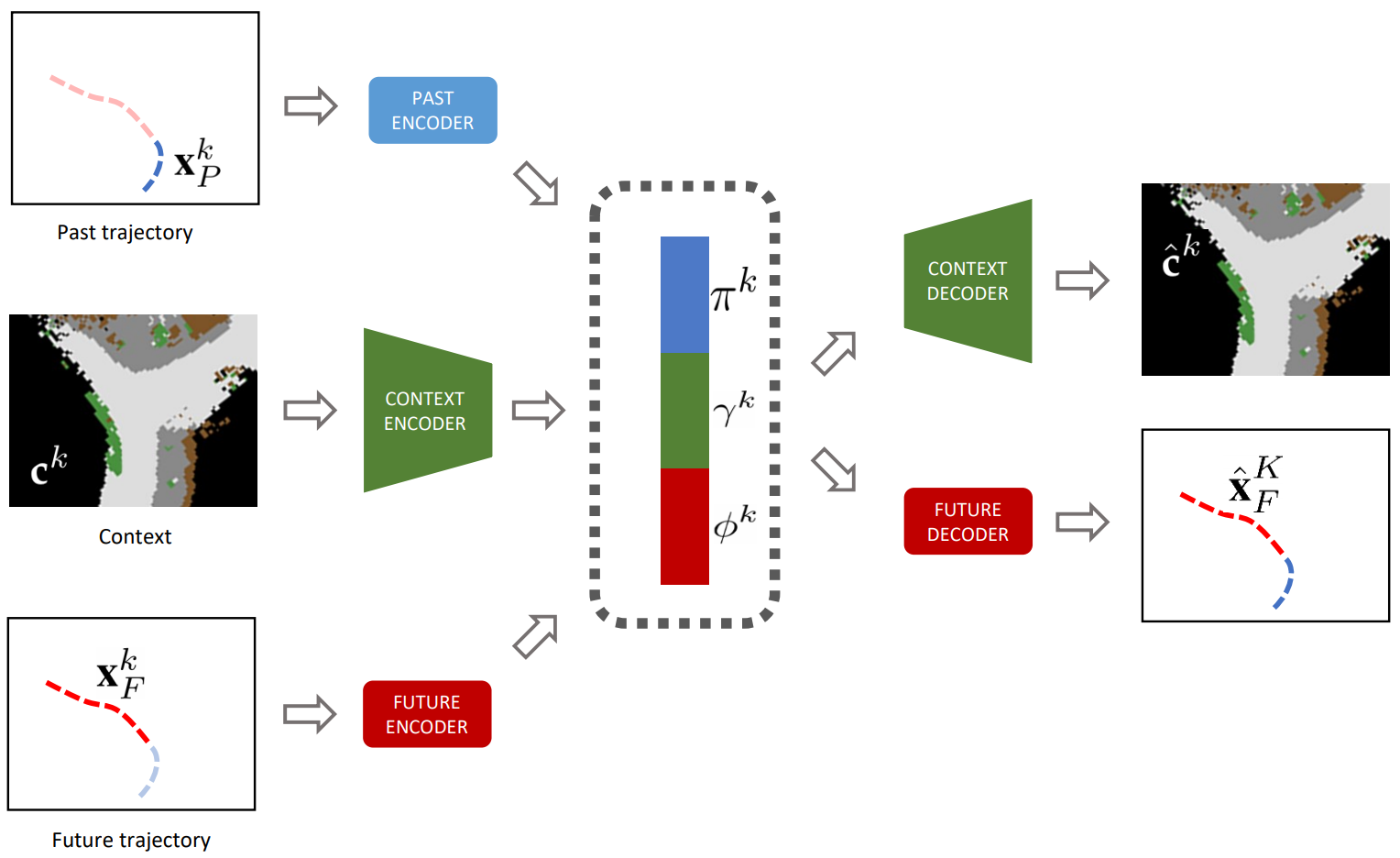

Feature representation

Embeddings of past and future trajectories are learned by training an autoencoder. Only the future is reconstructed, conditioned on the past trajectory [F. Marchetti et al., CVPR 2020]. Optionally, context can be included in the autoencoder [F. Marchetti et al., TPAMI 2020]. In this case, the context is also reconstructed with an additional decoder to facilitate the learning process.

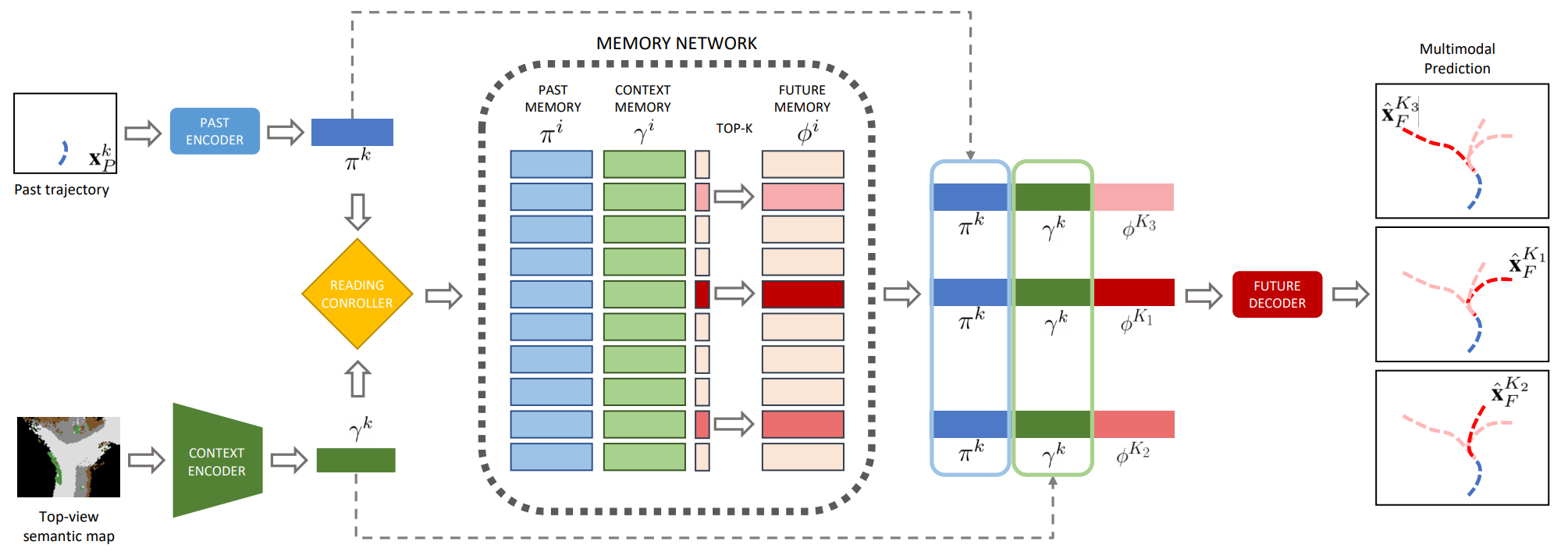

MANTRA

MANTRA stores in memory information about past trajectories but can also include representations of the surrounding context. When a trajectory is observed, its encoding is used as a key to access memory and read the K most likely futures. The retrieved future encodings are combined with the representations of the observed past and observed context and fed to the decoder. The final reconstruction is therefore guided by the future in memory but conditioned on the observed past and context. Each future encoding read from memory generates a different prediction, yielding a multimodal output.
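The key-based read described above can be illustrated with a minimal NumPy sketch. It assumes that memory is a pair of arrays (past encodings as keys, future encodings as values) and that cosine similarity is the matching score; the function name `read_top_k`, the encoding size of 48, and the random data are hypothetical choices made only for illustration, not the released implementation.

```python
import numpy as np

def read_top_k(past_key, memory_keys, memory_values, k=5):
    """Return the k stored future encodings whose keys best match past_key."""
    # Cosine similarity between the observed past encoding and every stored key
    # (assumed scoring function for this sketch).
    key_unit = past_key / (np.linalg.norm(past_key) + 1e-8)
    mem_unit = memory_keys / (np.linalg.norm(memory_keys, axis=1, keepdims=True) + 1e-8)
    scores = mem_unit @ key_unit
    # Indices of the k highest scores, best match first.
    top = np.argsort(-scores)[:k]
    return memory_values[top], scores[top]

rng = np.random.default_rng(0)
memory_keys = rng.normal(size=(100, 48))    # hypothetical past encodings
memory_values = rng.normal(size=(100, 48))  # hypothetical future encodings

# A query that is a slightly perturbed copy of stored key 7.
query = memory_keys[7] + 0.01 * rng.normal(size=48)
futures, scores = read_top_k(query, memory_keys, memory_values, k=3)
print(futures.shape)
```

Each of the K rows returned would then be decoded separately together with the observed past, which is what produces K distinct predictions.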

At training time, a learned writing controller decides what to store in memory in order to retain only meaningful and non-redundant samples. At test time, a reading controller is used to read the best K samples.
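To make the role of the writing controller concrete, the sketch below replaces the learned controller with a hand-written threshold rule: a sample is written only when none of the futures already retrievable from memory ends close to the true future. The function `should_write`, the threshold value, and the toy trajectories are assumptions for illustration; the actual controller is learned rather than thresholded.

```python
import numpy as np

def should_write(true_future, predicted_futures, threshold=1.0):
    """Hand-written stand-in for the learned writing controller (assumption).

    true_future: array of shape (T, 2); predicted_futures: array of shape (K, T, 2).
    Returns True when no existing prediction ends near the true endpoint.
    """
    # Final displacement of every candidate prediction from the ground truth.
    errors = np.linalg.norm(predicted_futures[:, -1] - true_future[-1], axis=-1)
    return bool(errors.min() > threshold)

steps = np.arange(1, 5, dtype=float)
straight = np.stack([np.zeros(4), steps], axis=1)
left = np.stack([-steps, steps], axis=1)
right = np.stack([steps, steps], axis=1)

# Memory already predicts "straight": the sample is redundant.
write_redundant = should_write(straight, np.stack([left, straight]))
# Memory only predicts turns: the straight sample is novel.
write_novel = should_write(straight, np.stack([left, right]))
print(write_redundant, write_novel)
```

Under this rule memory grows only when an observed sample is poorly explained by what is already stored, which mirrors the stated goal of keeping meaningful and non-redundant samples.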

Qualitative Results

Models that generate a single prediction, such as a Linear Regressor or a Kalman Filter, fail to address the multimodality of the task. These methods are designed to lower the error of a single output, even when there may be multiple, equally likely outcomes. As a result, when facing a bifurcation, the model may predict an average of the two possible trajectories in an attempt to satisfy both scenarios. Each prediction of MANTRA instead follows a specific path, ignoring the others.
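The averaging effect can be seen in a small numerical example. The two branches below are toy trajectories invented for illustration; their point-wise mean is the single output that minimizes the squared error when both branches are equally likely.

```python
import numpy as np

t = np.linspace(0.0, 1.0, 5)
left = np.stack([-t, t], axis=1)   # branch that bends left (x decreases)
right = np.stack([t, t], axis=1)   # branch that bends right (x increases)

# Point-wise mean of the two equally likely futures.
mean_path = (left + right) / 2

# Distance between the averaged endpoint and either real endpoint.
gap = float(np.linalg.norm(mean_path[-1] - left[-1]))
print(mean_path[:, 0], gap)
```

The averaged path keeps x at zero for every step, so it runs straight between the two branches and ends one unit away from both, matching neither plausible future.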

Online Setting

Unlike prior work on trajectory prediction, MANTRA is able to improve its capabilities online by observing other agents while driving.

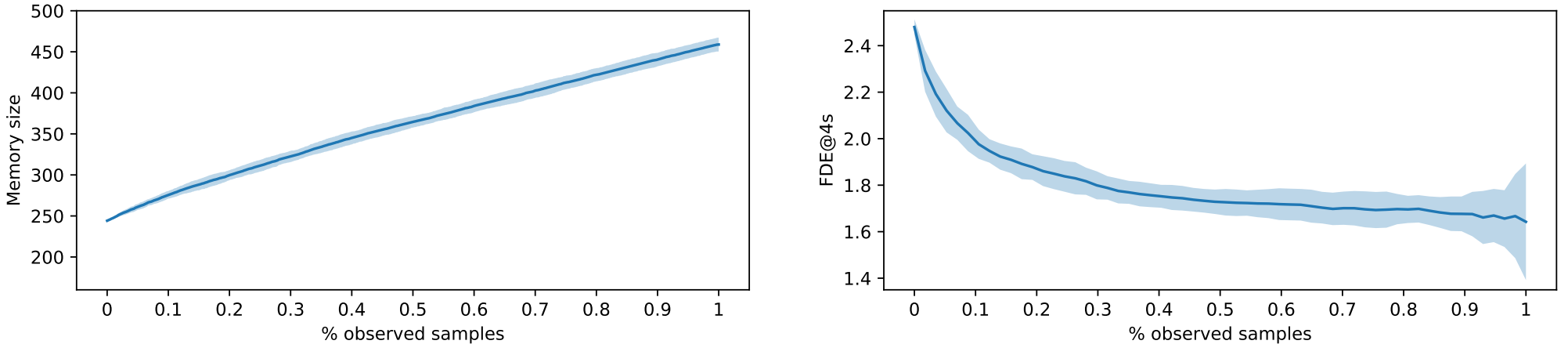

We simulate an online scenario on KITTI, iteratively removing N samples from the test set and feeding them to the memory writing controller without retraining the encoder. In this way, the controller can write novel, useful samples to memory. After every new batch of samples, we evaluate the model on the remaining trajectories in the test set, until the test set has been completely observed.

We show the number of samples in memory and the Final Displacement Error (FDE@4s) as a function of the percentage of observed samples in the test set. Interestingly, memory size grows slowly while the error keeps decreasing.
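The evaluation protocol described above can be sketched as a loop. This is a toy version under several assumptions: raw trajectories are stored instead of learned encodings, retrieval uses Euclidean distance between pasts, a fixed threshold stands in for the learned writing controller, and the data are synthetic rather than KITTI. All names (`online_eval`, `best_fde`, `toy_sample`) are hypothetical.

```python
import numpy as np

def top_k_futures(memory, past, k):
    """Futures of the k stored samples whose past is closest to `past`."""
    dists = [np.linalg.norm(p - past) for p, _ in memory]
    order = np.argsort(dists)[:k]
    return [memory[i][1] for i in order]

def best_fde(memory, past, future, k):
    """Best-of-k final displacement error; infinite when memory is empty."""
    if not memory:
        return float("inf")
    return min(float(np.linalg.norm(f[-1] - future[-1]))
               for f in top_k_futures(memory, past, k))

def online_eval(memory, test_set, batch_size, k=3, write_threshold=0.5):
    """Feed the test set to memory in batches, evaluating on what remains."""
    remaining = list(test_set)
    total = len(remaining)
    history = []  # (fraction observed, memory size, mean FDE on remaining)
    while remaining:
        batch, remaining = remaining[:batch_size], remaining[batch_size:]
        for past, future in batch:
            # Stand-in writing rule: store only samples memory predicts poorly.
            if best_fde(memory, past, future, k) > write_threshold:
                memory.append((past, future))
        if remaining:
            err = np.mean([best_fde(memory, p, f, k) for p, f in remaining])
            history.append((1 - len(remaining) / total, len(memory), float(err)))
    return history

rng = np.random.default_rng(0)

def toy_sample():
    """Synthetic agent: straight past, then a left, straight, or right future."""
    heading = rng.uniform(0, 2 * np.pi)
    turn = rng.choice([-0.5, 0.0, 0.5])
    steps = np.arange(1, 5)[:, None]
    past = steps * np.array([np.cos(heading), np.sin(heading)])
    new_heading = heading + turn
    future = past[-1] + steps * np.array([np.cos(new_heading), np.sin(new_heading)])
    return past, future

test_set = [toy_sample() for _ in range(60)]
memory = []
history = online_eval(memory, test_set, batch_size=10)
for fraction, size, err in history:
    print(f"{fraction:.2f} observed, memory size {size}, FDE {err:.3f}")
```

The encoder is never updated inside the loop; only the memory contents change, which is the non-parametric behaviour the online setting relies on.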

Simulation in CARLA

We developed a demonstrator to showcase MANTRA in the CARLA simulator. While the self-driving car drives in urban streets, the model predicts future trajectories of surrounding agents, consistent with their past motion and the context.

MANTRA is trained offline with the CARLA-PRECOG dataset and is tested in the simulator. We use two different settings regarding memory:

- Predetermined memory: the memory is filled during training offline.

- Online memory: memory is filled online during the simulation using the writing controller.

In the demonstrator, it is possible to switch models, also showcasing baseline methods such as a Kalman filter or a Multi-Layer Perceptron. Baseline models can generate only a single prediction, losing multimodality. In addition, any other model can subsequently be loaded into the simulation to verify the efficacy of custom trajectory predictors.

Common metrics such as Average/Final Displacement Error (ADE/FDE) are shown in real time during the simulation. This is particularly insightful for the online version of MANTRA, which gradually improves as the simulation advances. Similarly, custom plots can be added to the interface to monitor variables such as memory size.
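For reference, ADE and FDE can be computed as below. The sketch assumes each trajectory is an array of shape (T, 2) holding 2D positions; the function names and the best-of-K helper are illustrative and are not taken from the demonstrator code.

```python
import numpy as np

def ade(pred, truth):
    """Average Displacement Error: mean Euclidean distance over all timesteps."""
    return float(np.mean(np.linalg.norm(pred - truth, axis=-1)))

def fde(pred, truth):
    """Final Displacement Error: Euclidean distance at the last timestep."""
    return float(np.linalg.norm(pred[-1] - truth[-1]))

def best_of_k(preds, truth, metric):
    """Lowest error among K candidate predictions (for multimodal models)."""
    return min(metric(p, truth) for p in preds)

truth = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]])
drifting = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]])

print(ade(drifting, truth), fde(drifting, truth))  # 1.0 2.0
print(best_of_k([drifting, truth], truth, fde))    # 0.0
```

In the example the per-step distances are 0, 1 and 2, so the average is 1 and the final error is 2; the best-of-K value is 0 because one candidate matches the ground truth exactly.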

Publications

Marchetti, F., Becattini, F., Seidenari, L., & Del Bimbo, A. (2020). MANTRA: Memory Augmented Networks for Multiple Trajectory Prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 7143-7152).

Marchetti, F., Becattini, F., Seidenari, L., & Del Bimbo, A. (2020). Multiple Trajectory Prediction of Moving Agents with Memory Augmented Networks. IEEE Transactions on Pattern Analysis and Machine Intelligence.

Marchetti, F., Becattini, F., Seidenari, L., & Del Bimbo, A. (2020). Multi-Modal Trajectory Prediction in Carla Simulator. ECCV 2020 Demo.

Acknowledgments

This project was partially funded by IMRA Europe S.a.S.