The success of media sharing and social networks has made extremely large quantities of user-tagged images available. Managing this combination of media and metadata efficiently and effectively poses significant challenges. In particular, automatic annotation of social images has become an important research topic for the multimedia community.

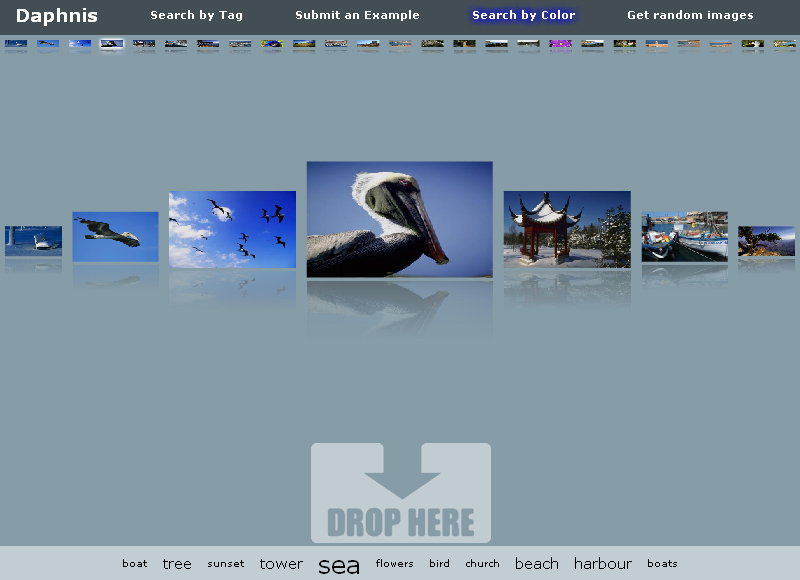

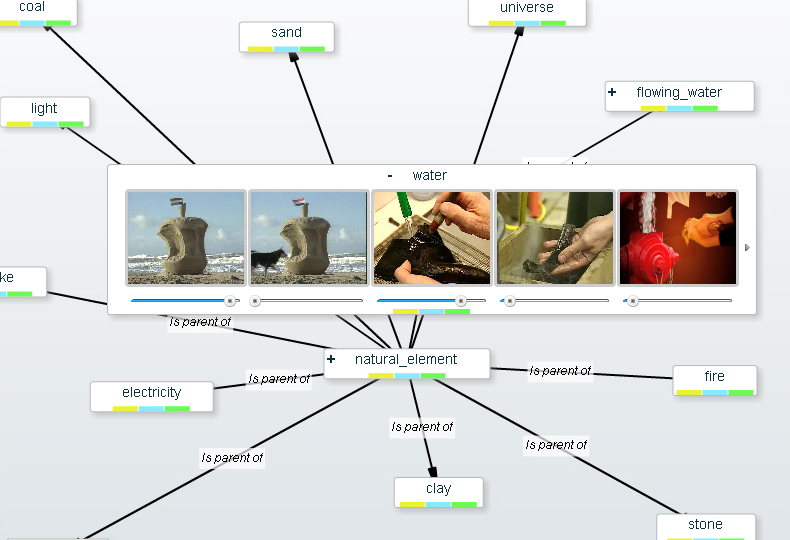

Detected tags in an image using Nearest-Neighbor Methods for Tag Refinement

We propose and thoroughly evaluate the use of nearest-neighbor methods for tag refinement. An extensive evaluation on two standard large-scale datasets shows that the performance of these methods is comparable with that of more complex and computationally intensive approaches. Unlike those approaches, nearest-neighbor methods can be applied to ‘web-scale’ data.
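To make the general idea concrete, here is a minimal sketch of similarity-weighted neighbor voting, one simple member of the nearest-neighbor family. It is not the released ICME13 code and does not reproduce any specific method evaluated in the paper. The function names, the dictionary layout (`features`, `tags`), and the choice of cosine similarity are all assumptions made for illustration.

```python
import math
from collections import Counter


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def refine_tags(query_features, database, k=3, top_n=2):
    """Rank tags by similarity-weighted votes from the k nearest images.

    `database` is a hypothetical list of dicts with keys "features"
    (visual descriptor) and "tags" (user tags); this layout is an
    assumption, not the format of the released metadata.
    """
    # Score every database image against the query, then keep the k closest.
    scored = [
        (cosine_similarity(query_features, item["features"]), item["tags"])
        for item in database
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)

    # Each neighbor votes for its own tags, weighted by its similarity.
    votes = Counter()
    for similarity, tags in scored[:k]:
        for tag in tags:
            votes[tag] += similarity
    return [tag for tag, _ in votes.most_common(top_n)]
```

With a toy database of a few tagged images, `refine_tags(query, database, k=2)` returns the tags that the two visually closest images agree on most strongly. The brute-force scan over `database` is only for readability; at web scale the neighbor search would typically rely on precomputed similarities or an approximate index.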

Here we make available the code and the metadata for NUS-WIDE-240K.

- ICME13 Code (~8.5 GB, code + similarity matrices)

- NUS-WIDE-240K dataset metadata (JSON format, about 25 MB). A subset of 238,251 images from NUS-WIDE-270K that we retrieved from Flickr together with user data. Note that NUS is now releasing the full image set subject to an agreement and disclaimer form.

If you use this data, please cite the paper as follows:

@InProceedings{UBBD13,
  author = "Uricchio, Tiberio and Ballan, Lamberto and Bertini, Marco and Del Bimbo, Alberto",
  title = "An evaluation of nearest-neighbor methods for tag refinement",
  booktitle = "Proc. of IEEE International Conference on Multimedia \& Expo (ICME)",
  month = "jul",
  year = "2013",
  address = "San Jose, CA, USA",
  url = "http://www.micc.unifi.it/publications/2013/UBBD13"
}