Insights

My Travel Mate is an Android application composed of three interacting modules: i) the Location Module, which provides the current location and nearby points of interest; ii) the Content Provider, which fetches textual information about each point of interest; iii) the Vision Module, which continuously acquires the user's view from the phone camera and compares it against a set of expected point-of-interest appearances.

Location Module: the Location Module is responsible for retrieving the points of interest near the user. It queries Google Places for a list of 20 points of interest within a given radius, each annotated with one or more types. The user can personalize the application by specifying which types they are interested in (e.g. historical monuments) and excluding all results that contain unwanted types (e.g. cafes). To avoid returning points of interest that share a common name but are located elsewhere, we explicitly specify the name of the current city in the query.
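The type-based personalization described above can be sketched as a filter over the returned places. This is a minimal illustration, not the application's actual code: it assumes each result is a dictionary with `name` and `types` fields, and the function name `filter_places` and the sample entries are made up for the example.

```python
def filter_places(places, wanted, unwanted, limit=20):
    """Keep places that have at least one wanted type and no unwanted type.

    An empty `wanted` set is treated as "no preference" (assumption).
    """
    wanted, unwanted = set(wanted), set(unwanted)
    kept = []
    for place in places:
        types = set(place.get("types", []))
        if types & unwanted:
            continue  # any unwanted type excludes the result
        if wanted and not (types & wanted):
            continue  # none of the types the user asked for
        kept.append(place)
    return kept[:limit]


# Illustrative entries only; not real query results.
SAMPLE_PLACES = [
    {"name": "Old Cathedral", "types": ["church", "point_of_interest"]},
    {"name": "Corner Cafe", "types": ["cafe", "point_of_interest"]},
    {"name": "City Museum", "types": ["museum", "cafe"]},
]

if __name__ == "__main__":
    selected = filter_places(SAMPLE_PLACES, {"museum", "church"}, {"cafe"})
    print([p["name"] for p in selected])
```

Note that a place carrying both a wanted and an unwanted type is dropped, which matches the rule of excluding every result that contains an unwanted type.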

Content Provider: this module translates points of interest into artwork descriptions. It queries Wikipedia for articles that contain both the point name and the city name, both obtained from the Location Module and localized in the local language. The first result is selected as the best candidate, and a second query to Wikipedia collects the page extract, which is then used as the artwork description. To provide translations into other languages, the module performs an additional Wikipedia query, this time requesting the Interlanguage Links for the retrieved page.
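The three Wikipedia queries described above (search, page extract, interlanguage links) can be sketched with the MediaWiki Action API. The snippet only builds the request URLs and performs no network calls. The helper names are hypothetical, and using `exintro` and `explaintext` to shorten the extract is an assumption rather than something the text specifies.

```python
from urllib.parse import urlencode


def api_endpoint(lang):
    """Action API endpoint of the Wikipedia edition for language code `lang`."""
    return f"https://{lang}.wikipedia.org/w/api.php"


def search_params(point_name, city):
    # Full-text search for point name plus city; keep only the first hit.
    return {"action": "query", "list": "search",
            "srsearch": f"{point_name} {city}", "srlimit": 1, "format": "json"}


def extract_params(title):
    # Plain-text extract of the introduction (assumed to be enough here).
    return {"action": "query", "prop": "extracts", "exintro": 1,
            "explaintext": 1, "titles": title, "format": "json"}


def langlinks_params(title, target_lang):
    # Interlanguage link from the retrieved page to `target_lang`.
    return {"action": "query", "prop": "langlinks", "titles": title,
            "lllang": target_lang, "format": "json"}


def build_url(lang, params):
    return api_endpoint(lang) + "?" + urlencode(params)


if __name__ == "__main__":
    print(build_url("it", search_params("Duomo", "Firenze")))
```

The title returned by the search step would be passed to `extract_params` and `langlinks_params`; the title found in the language link can then be used to request the extract from the target-language edition.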

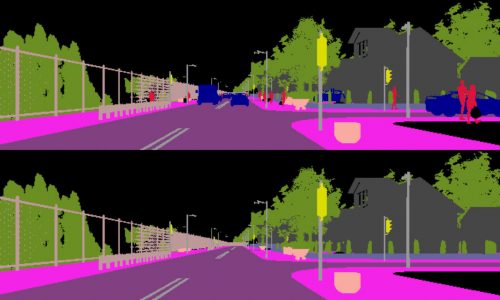

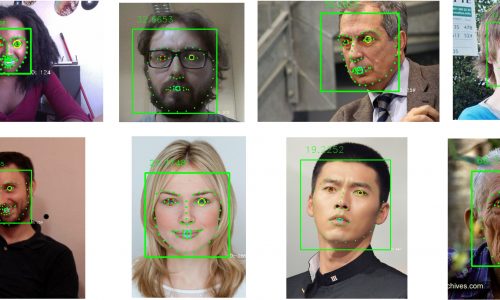

Vision Module: understanding when the user is actually facing a landmark is not a trivial task, since user position and device orientation are not reliable. To address this issue, the system uses a computer vision algorithm that continuously observes the user's perspective and matches it against the surrounding artworks provided by the Location Module. To determine whether the user is facing one of the surrounding landmarks, the module queries Google Street View and retrieves a set of images taken from the estimated point of view of the user. We index SIFT descriptors on multiple kd-trees to reduce the burden of RANSAC geometric verification.
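One common way to keep geometric verification cheap is to discard ambiguous descriptor matches before RANSAC runs. The sketch below uses Lowe's ratio test for that purpose; the text does not say the system uses it, so treat it as an assumption. For brevity it also replaces the kd-tree lookup with a brute-force search and uses toy 2-D vectors instead of 128-D SIFT descriptors.

```python
import math


def two_nearest(query, reference):
    """Return (distance, index) pairs for the two reference descriptors
    closest to `query`. Brute force stands in for a kd-tree lookup."""
    ranked = sorted((math.dist(query, ref), i) for i, ref in enumerate(reference))
    return ranked[0], ranked[1]


def ratio_test_matches(query_descs, reference_descs, ratio=0.75):
    """Keep a match only if the best neighbour is clearly closer than the
    second best; `reference_descs` needs at least two entries."""
    matches = []
    for qi, q in enumerate(query_descs):
        (d1, i1), (d2, _) = two_nearest(q, reference_descs)
        if d1 < ratio * d2:
            matches.append((qi, i1))
    return matches


if __name__ == "__main__":
    reference = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    query = [(0.5, 0.0), (5.0, 0.0), (0.0, 9.0)]
    # The middle query point is equidistant from two references and is dropped.
    print(ratio_test_matches(query, reference))
```

Only the surviving pairs would be handed to the geometric verification step, so fewer candidate correspondences have to be tested.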

To provide an adequate user experience, the system applies temporal smoothing to suppress erroneous detections and incorporates a tracking strategy so that its output remains continuous over time.
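The text does not specify the smoothing method, so the following is only one plausible sketch: a sliding-window majority vote over per-frame detections. The class name `DetectionSmoother` and the parameters `window` and `min_votes` are hypothetical.

```python
from collections import Counter, deque


class DetectionSmoother:
    """Report a landmark only after it wins enough votes in recent frames."""

    def __init__(self, window=5, min_votes=3):
        self.history = deque(maxlen=window)  # most recent per-frame results
        self.min_votes = min_votes

    def update(self, detection):
        """`detection` is a landmark id, or None if nothing was matched in
        the current frame. Returns the smoothed landmark id or None."""
        self.history.append(detection)
        votes = Counter(d for d in self.history if d is not None)
        if not votes:
            return None
        label, count = votes.most_common(1)[0]
        return label if count >= self.min_votes else None


if __name__ == "__main__":
    smoother = DetectionSmoother(window=5, min_votes=3)
    frames = ["A", None, "A", "B", "A", "A"]
    print([smoother.update(f) for f in frames])
```

With these settings a single spurious detection (the `"B"` frame) never reaches the user, at the cost of a few frames of latency before a genuine landmark is reported.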

The proposed system was developed on an NVIDIA Jetson TK1 board to test the performance of the vision system, and was then ported to an NVIDIA Shield Tablet K1 running Android.