Insights

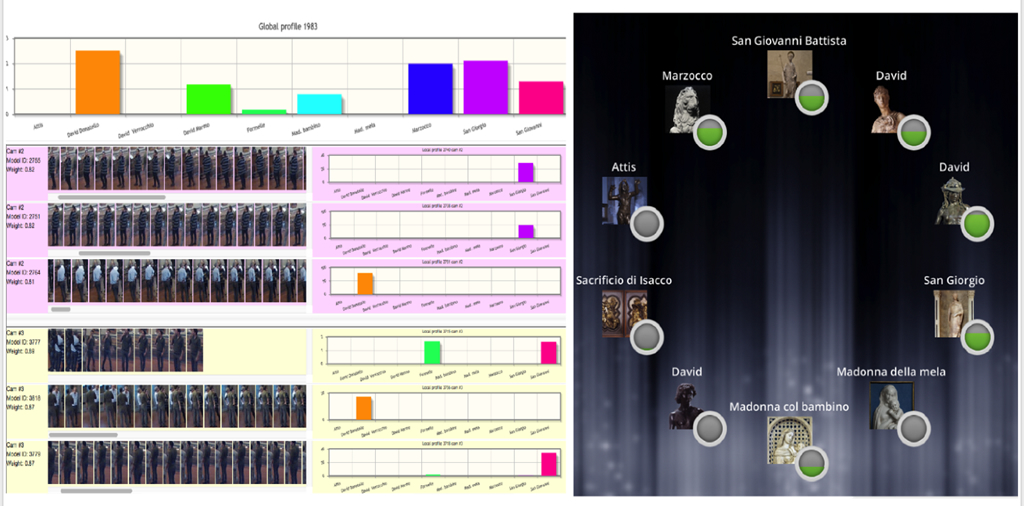

The MNEMOSYNE system builds on state-of-the-art Detection and Re-identification technology to identify individual persons across multiple camera views while they are near the artworks during a museum visit.

This makes it possible to build visitor profiles and to provide each visitor with personalized information about the artworks that attracted them the most.

Detection exploits:

- a learned model of the scene geometry, which provides a limited number of image windows where a person is likely to be found;

- a classifier that assesses the presence of a person in each of these windows.

Re-identification exploits:

- an effective target representation that divides the person image into horizontal stripes in order to capture information about vertical color distribution and color correlation between adjacent stripes;

- a high-performing Re-identification method that ranks the detections.
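
As a rough illustration of the stripe-based representation and the ranking step, the following Python sketch computes one coarse color histogram per horizontal stripe and sorts candidate detections by their distance to a probe image. It is a simplified stand-in, not the MNEMOSYNE implementation: the function names, the number of stripes and bins, and the L1 distance are all assumptions, and the color correlation between adjacent stripes is left out for brevity.

```python
from typing import Dict, List, Sequence, Tuple

Pixel = Tuple[int, int, int]        # (R, G, B), each channel in 0..255
Image = Sequence[Sequence[Pixel]]   # rows of pixels, top to bottom
Descriptor = List[List[float]]      # one normalized histogram per stripe


def stripe_descriptor(image: Image, n_stripes: int = 6, bins: int = 4) -> Descriptor:
    """Split a person image into horizontal stripes and build one
    normalized RGB histogram per stripe (hypothetical parameters)."""
    height = len(image)
    step = 256 // bins
    descriptor: Descriptor = []
    for s in range(n_stripes):
        top = s * height // n_stripes
        bottom = (s + 1) * height // n_stripes
        hist = [0.0] * (bins ** 3)
        count = 0
        for row in image[top:bottom]:
            for r, g, b in row:
                # Quantize each channel; clamp in case 256 is not a multiple of bins.
                qr = min(r // step, bins - 1)
                qg = min(g // step, bins - 1)
                qb = min(b // step, bins - 1)
                hist[(qr * bins + qg) * bins + qb] += 1.0
                count += 1
        if count:
            hist = [h / count for h in hist]
        descriptor.append(hist)
    return descriptor


def descriptor_distance(a: Descriptor, b: Descriptor) -> float:
    """Sum of L1 distances between corresponding stripes (an assumed metric)."""
    return sum(abs(x - y) for ha, hb in zip(a, b) for x, y in zip(ha, hb))


def rank_detections(probe: Descriptor, gallery: Dict[str, Descriptor]) -> List[str]:
    """Return gallery identifiers ordered from most to least similar to the probe."""
    return sorted(gallery, key=lambda key: descriptor_distance(probe, gallery[key]))
```

Because each stripe keeps its own histogram, two people wearing the same colors in a different vertical arrangement (for example, a red shirt over blue trousers versus the reverse) produce different descriptors, which is the point of dividing the image into stripes.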

The MNEMOSYNE system operates in real time and provides each visitor with personalized information on a tabletop device at the exit of the Donatello hall in the Bargello National Museum in Florence.

The MNEMOSYNE prototype uses two different solutions to provide recommendations to users: a knowledge-based system and an experience-based system. These modules have been developed as web servlets that expose the recommendation web services through a Representational State Transfer (REST) interface.
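
To give an idea of how a client might address the two recommenders over REST, here is a minimal Python sketch that only builds a request URL. The path layout, the query parameter names, and the `knowledge` / `experience` labels are hypothetical, since the actual service interface is not described here.

```python
from urllib.parse import quote, urlencode

# Hypothetical labels for the two recommenders; the real service names may differ.
RECOMMENDER_KINDS = ("knowledge", "experience")


def recommendation_url(base_url: str, kind: str, artwork_id: str, visitor_id: str) -> str:
    """Build the URL of a GET request for recommendations (assumed URL scheme)."""
    if kind not in RECOMMENDER_KINDS:
        raise ValueError(f"unknown recommender kind: {kind!r}")
    # urlencode escapes the parameter values so they are safe in a query string.
    query = urlencode({"artwork": artwork_id, "visitor": visitor_id})
    return f"{base_url.rstrip('/')}/{quote(kind)}/recommendations?{query}"
```

A client such as the user interface software could then fetch this URL with any HTTP library and parse the response in whatever format the service returns.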

The tabletop display is a large touchscreen device (a 55-inch display with Full HD resolution) placed horizontally on a customized table-shaped structure. When the passive profiling system detects a visitor approaching the table, it sends the interest profile to the user interface software, which then exchanges data with the recommendation system to load all the multimedia content that will be displayed for this user.
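
The handoff described above can be sketched as follows. This is an illustrative outline under stated assumptions: the `InterestProfile` structure, the `on_visitor_approach` function, the `top_k` cutoff, and the idea that a profile maps artwork identifiers to numeric interest scores are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class InterestProfile:
    visitor_id: str
    interest: Dict[str, float]   # artwork id -> interest score (assumed representation)


# A recommender maps (artwork id, profile) to a list of resource identifiers.
Recommender = Callable[[str, InterestProfile], List[str]]


def on_visitor_approach(
    profile: InterestProfile, recommend: Recommender, top_k: int = 3
) -> Dict[str, List[str]]:
    """Called when the profiling system reports a visitor near the table:
    pick the artworks with the highest interest and load content for each."""
    ranked = sorted(profile.interest, key=profile.interest.get, reverse=True)
    return {artwork: recommend(artwork, profile) for artwork in ranked[:top_k]}
```

Passing the recommender in as a callable keeps the user interface code independent of whether the content comes from the knowledge-based or the experience-based service.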

The metaphor proposed for the user interface is based on the idea of a hidden museum waiting to be unveiled, starting from the top (the physical artworks) and moving deeper towards additional resources such as explanations and relations between one artwork and others.

The metaphor aims to hide the complexity of the data extracted by the recommendation and passive profiling systems, letting users perform fewer and simpler actions when deciding which content to view and interact with.

The artworks level shows digital representations of the physical artworks in which the visitor has shown the highest level of interest (see figure 6a), based on the data produced by the passive profiling system. When the user touches an artwork item, a vertical animation moves the point of view below the current space and reveals the level containing the resources related to that artwork.

The related resources level represents a horizontally arranged space in which the visitor can navigate through the multimedia content related to the selected artwork. Related resources are organized in three different spaces (insights, recommendations and social), which can be explained as follows:

- insights: stories directly related to the artwork in the ontology;

- recommendations: resources related to the artwork and its related stories in the ontology according to the knowledge-based recommendation system;

- social: similar artworks according to the experience-based recommendation system using the visitor profile.
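
One way to picture the related resources level is as a small container with one list per space. The Python sketch below is only an assumption about how such data could be organized; the class name, the field names, and the left-to-right ordering of the spaces are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class RelatedResources:
    artwork_id: str
    insights: List[str] = field(default_factory=list)          # stories from the ontology
    recommendations: List[str] = field(default_factory=list)   # knowledge-based results
    social: List[str] = field(default_factory=list)            # experience-based results

    def spaces(self) -> List[Tuple[str, List[str]]]:
        """Return the three spaces in the order assumed for horizontal navigation."""
        return [
            ("insights", self.insights),
            ("recommendations", self.recommendations),
            ("social", self.social),
        ]

    def non_empty_spaces(self) -> List[str]:
        """Names of the spaces that actually have content to display."""
        return [name for name, items in self.spaces() if items]
```

For example, `RelatedResources("artwork_a", insights=["story_1"])` would expose only the insights space through `non_empty_spaces()`, so the interface could skip the empty ones.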