Insights

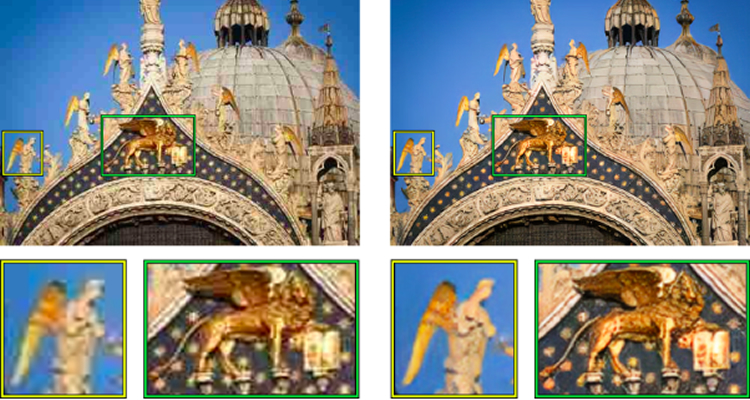

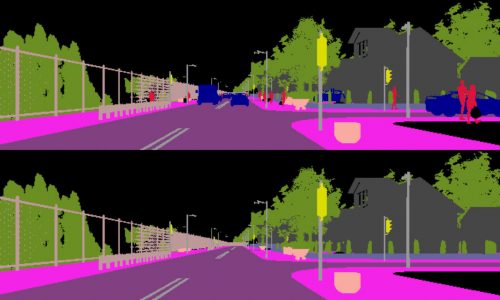

Image compression is a need that arises in many circumstances. Unfortunately, whenever a lossy compression algorithm is used, artifacts will manifest. Image artifacts, caused by compression tend to eliminate higher frequency details and in certain cases may add noise or small image structures. There are two main drawbacks of this phenomenon. First, images appear much less pleasant to the human eye. Second, computer vision algorithms such as object detectors may be hindered and their performance reduced. Removing such artifacts means recovering the original image from a perturbed version of it. This means that one ideally should invert the compression process through a complicated non-linear image transformation. We propose an image transformation approach based on a feed-forward fully convolutional residual network model. We show that this model can be optimized either traditionally, directly optimizing an image similarity loss (SSIM), or using a generative adversarial approach (GAN). Our GAN is able to produce images with more photorealistic details than SSIM based networks. We describe a novel training procedure based on sub-patches and devise a novel testing protocol to evaluate restored images quantitatively. We show that our approach can be used as a pre-processing step for different computer vision tasks in case images are degraded by compression to a point that state-of-the art algorithms fail. In this case, our GAN-based approach obtains better performance than MSE or SSIM trained networks. Differently from previously proposed approaches we are able to remove artifacts generated at any QF by inferring the image quality directly from data.