TANGerINE cities is a research project that investigates collaborative tangible applications. It was developed within the TANGerINE research project, an ongoing line of research on tangible user interfaces (TUIs). TANGerINE combines earlier work on natural, vision-based gestural interaction on augmented surfaces and tabletops with smart wireless objects and sensor fusion techniques.

TANGerINE Cities

Unlike the passively recognized objects common in mixed and augmented reality approaches, smart objects continuously report their status through embedded wireless sensors, while an external computer vision module tracks their position and orientation in space. By merging these two data sources, the system can detect a richer set of gestures and manipulations, both on the tabletop and around it, which enables a more expressive interaction language across different scenarios.

Users can interact with the system and the objects in two contexts: the active presentation area (the surface of the table) and the nearby area (around the table).
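As a rough illustration of the sensor fusion described above, the following sketch combines the two data sources. The vision module decides *where* the object is (tracked on the table surface or not, i.e. in the surrounding area), and the embedded sensors decide *what* is being done with it, even when the camera cannot see it. Everything here is a hypothetical assumption, not the TANGerINE implementation: the names `VisionPose`, `FusedEvent`, and `fuse`, the use of accelerometer magnitudes and a vision-tracked angle as inputs, the gesture set, and the threshold values.

```python
from dataclasses import dataclass
from statistics import pstdev
from typing import Optional, Sequence

# Hypothetical tuning values, chosen only for illustration.
SHAKE_STD_THRESHOLD = 0.5     # std. dev. of accelerometer magnitude, in g
ROTATE_THRESHOLD_DEG = 30.0   # minimum rotation between two vision frames


@dataclass
class VisionPose:
    """Pose reported by a (hypothetical) camera tracker for an object on the surface."""
    x: float
    y: float
    angle_deg: float


@dataclass
class FusedEvent:
    context: str   # "table" (active presentation area) or "surroundings"
    gesture: str   # "shake", "rotate" or "still"


def angle_delta(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    d = (b - a) % 360.0
    return min(d, 360.0 - d)


def fuse(accel_magnitudes: Sequence[float],
         prev_pose: Optional[VisionPose],
         pose: Optional[VisionPose]) -> FusedEvent:
    """Merge one window of sensor data with the latest vision poses."""
    # Vision decides the context: a pose means the object is tracked on the table.
    context = "table" if pose is not None else "surroundings"

    # Embedded sensors detect shaking anywhere, including away from the table.
    if len(accel_magnitudes) > 1 and pstdev(accel_magnitudes) > SHAKE_STD_THRESHOLD:
        gesture = "shake"
    # Rotation is taken from vision, so it is only detected on the surface.
    elif (pose is not None and prev_pose is not None
          and angle_delta(prev_pose.angle_deg, pose.angle_deg) > ROTATE_THRESHOLD_DEG):
        gesture = "rotate"
    else:
        gesture = "still"
    return FusedEvent(context, gesture)


if __name__ == "__main__":
    # Object turned by 70 degrees on the table while its sensors read steady values.
    print(fuse([1.0, 1.0, 1.0], VisionPose(0, 0, 10), VisionPose(0, 0, 80)))
    # Object out of the camera's view, with strongly varying acceleration.
    print(fuse([0.2, 2.0, 0.1, 1.9], None, None))
```

The split of responsibilities is the point of the sketch: neither source alone distinguishes a cube being shaken beside the table from one being rotated on it, while the fused result does.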

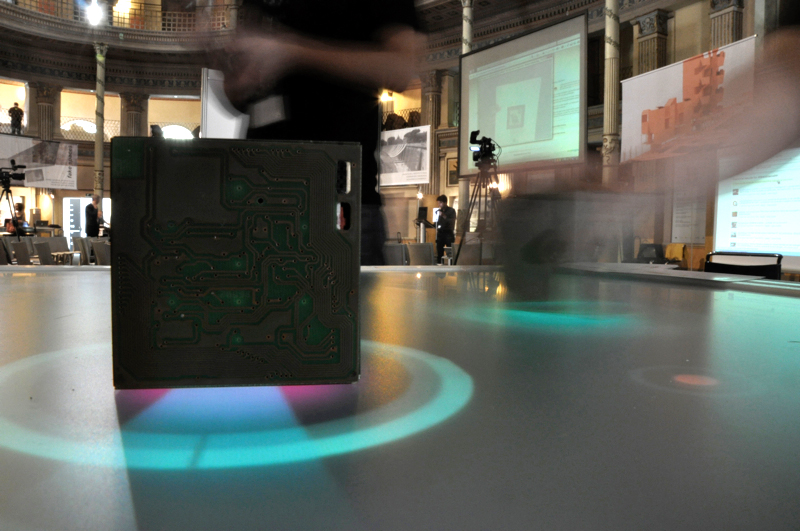

Presented at Frontiers of Interaction V (Rome, June 2009).

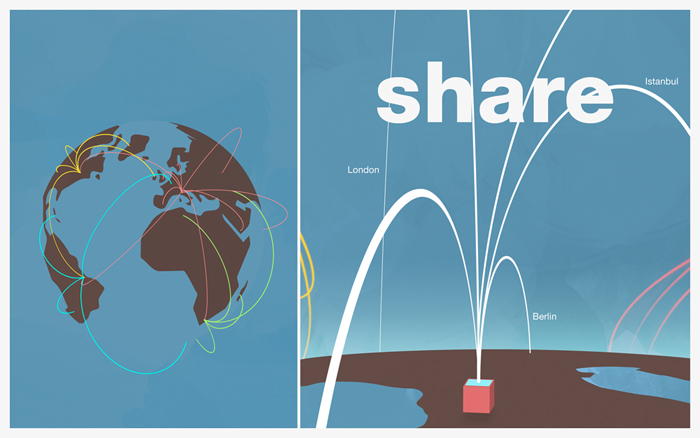

TANGerINE cities concept

TANGerINE cities lets users choose and elaborate the sounds that characterize today's cities. The TANGerINE cube collects sound fragments of the present and reassembles them to create harmonic sounds for the future. TANGerINE cities is a means of collective sound creation: a glimpse into the sound world of future cities. It imagines a future in which technological development will have helped reduce metropolitan acoustic pollution by transforming all noises into a harmonic soundscape. The collaborative nature of the TANGerINE table lets users compare their ideas face to face as they forecast what the noises of future cities will sound like. TANGerINE cities can also use noises uploaded to the web by users who have recorded their own sound worlds. In this way, the TANGerINE platform provides a real, tangible location within virtual social networks.
