The VidiVideo project takes on the challenge of creating substantially enhanced semantic access to video, implemented in a search engine. The outcome of the project is an audio-visual search engine composed of two parts. The first is an automatic annotation part that runs off-line, in which detectors for more than 1000 semantic concepts are collected in a thesaurus and used to process and automatically annotate the video. The second is an interactive part that provides a video search engine for both technical and non-technical users.

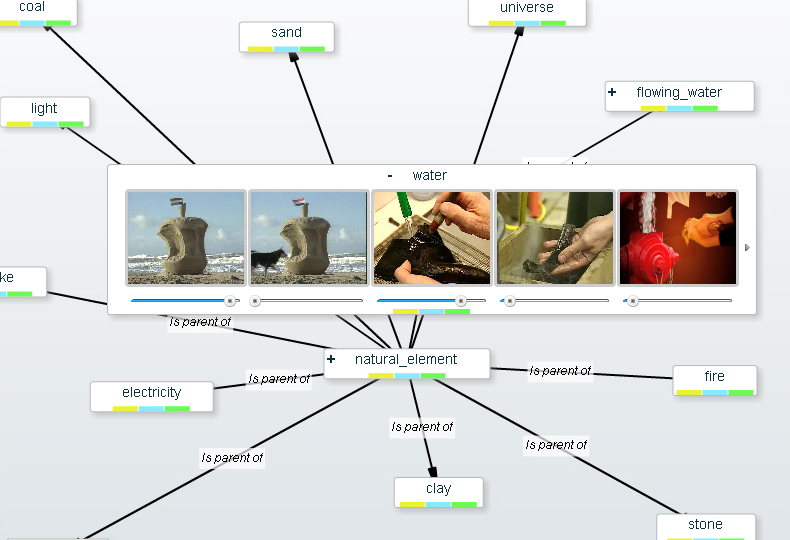

Andromeda: VidiVideo graph-based video browsing

Video plays a key role in the news, cultural heritage documentaries and surveillance, and it is a natural form of communication for the Internet and mobile devices. The massive increase in digital audio-visual information poses high demands on advanced storage and search engines for consumers and professional archives.

Video search engines are the product of progress in many technologies: visual and audio analysis, machine learning techniques, and visualization and interaction. At present, state-of-the-art systems can automatically annotate only a limited set of semantic concepts, and retrieval is restricted to keyword-based search over a lexicon.

The VidiVideo project takes on the challenge of creating a substantially enhanced semantic access to video, implemented in a search engine.

The outcome of the project is an audio-visual search engine composed of two parts. The first is an automatic annotation part that runs off-line, in which detectors for more than 1000 semantic concepts are collected in a thesaurus and used to process and automatically annotate the video. The second is an interactive part that provides a video search engine for both technical and non-technical users.

The automatic annotation part of the system performs audio and video segmentation, speech recognition, speaker clustering and semantic concept detection.

The VidiVideo system has achieved the highest performance in the most important international contests for object and concept recognition (PASCAL VOC and TRECVID).

The interactive part provides two applications: a desktop-based search engine and a web-based one. The system supports several query modalities (free text, natural language, graphical composition of concepts using boolean and temporal relations, and query by visual example) and visualizations, resulting in an advanced tool for retrieval and exploration of video archives for both technical and non-technical users in different application fields. In addition, the use of ontologies (instead of simple keywords) makes it possible to exploit semantic relations between concepts through reasoning, thereby extending user queries.
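As an illustration of how reasoning over semantic relations can extend a query, the following minimal Python sketch expands a query concept to all of its sub-concepts before matching it against annotations. It is only a sketch under stated assumptions: the toy ontology, the concept names, and the functions `expand_concept` and `matches` are hypothetical and are not taken from the VidiVideo thesaurus or code base, which may use richer relations and a dedicated reasoner.

```python
from collections import deque

# Hypothetical toy ontology: each concept maps to its direct sub-concepts.
# The concept names are illustrative only, not the VidiVideo thesaurus.
TOY_ONTOLOGY = {
    "vehicle": ["car", "airplane", "boat"],
    "car": ["police_car", "taxi"],
    "airplane": [],
    "boat": [],
    "police_car": [],
    "taxi": [],
}


def expand_concept(concept, ontology):
    """Return the concept followed by all its descendants, breadth-first."""
    expanded = [concept]
    queue = deque([concept])
    while queue:
        current = queue.popleft()
        for child in ontology.get(current, []):
            if child not in expanded:
                expanded.append(child)
                queue.append(child)
    return expanded


def matches(annotations, query_concept, ontology):
    """True if any annotation is the query concept or one of its sub-concepts."""
    return bool(set(expand_concept(query_concept, ontology)) & set(annotations))


if __name__ == "__main__":
    # A shot annotated only with "taxi" still answers a query for "vehicle".
    print(expand_concept("vehicle", TOY_ONTOLOGY))
    print(matches(["taxi", "street"], "vehicle", TOY_ONTOLOGY))
```

With plain keyword matching, a query for "vehicle" would miss a shot annotated only as "taxi"; following the sub-concept relation lets the same query retrieve it.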

The off-line annotation part has been implemented in C++ on the Linux platform, and takes advantage of the low-cost processing power provided by GPUs on consumer graphics cards.

The web-based system follows the Rich Internet Application (RIA) paradigm, using a client-side Flash virtual machine. RIAs can avoid the usual slow, synchronous loop for user interactions. This makes it possible to implement a visual querying mechanism whose look and feel approaches that of a desktop environment, with the fast response that users expect. The search results are returned in RSS 2.0 XML format, while videos are streamed using the RTMP protocol.
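Because the results are plain RSS 2.0, any client with an XML parser can consume them. The sketch below shows one way this might look in Python using the standard library. The sample feed, its URLs, and the function `parse_results` are assumptions made for illustration; the actual VidiVideo feeds may carry additional or different elements.

```python
import xml.etree.ElementTree as ET

# Hypothetical result feed: the titles, URLs, and descriptions are invented
# for illustration; only the rss/channel/item layout comes from RSS 2.0.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Search results: vehicle</title>
    <link>http://example.org/search</link>
    <description>Hypothetical result list</description>
    <item>
      <title>Shot 12</title>
      <link>rtmp://example.org/videos/news_0001</link>
      <description>Detected concept: car</description>
    </item>
    <item>
      <title>Shot 47</title>
      <link>rtmp://example.org/videos/news_0002</link>
      <description>Detected concept: taxi</description>
    </item>
  </channel>
</rss>"""


def parse_results(feed_xml):
    """Extract title, link, and description from each RSS 2.0 item."""
    root = ET.fromstring(feed_xml)
    results = []
    for item in root.findall("./channel/item"):
        results.append({
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            "description": item.findtext("description", default=""),
        })
    return results


if __name__ == "__main__":
    for result in parse_results(SAMPLE_FEED):
        print(result["title"], result["link"])
```

In this sketch each item's `link` is assumed to hold the stream location that a player would open over RTMP; how the real system associates results with streams is not stated in the text.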