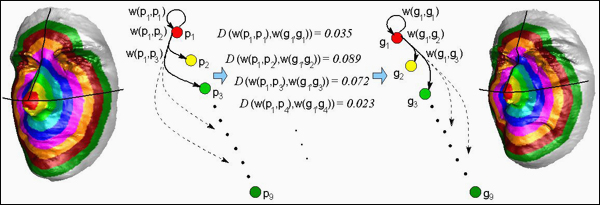

In this project, started in collaboration with the IRIS Computer Vision lab, University of Southern California, we address the problem of 2D/3D face recognition with a gallery containing 3D models of enrolled subjects and a probe set composed only of 2D images with pose variations. The gallery contains a raw 3D model for each person; each model comprises the facial shape, as a 3D mesh, and a 2D component, as a texture registered with the shape. The probe set, on the other hand, is assumed to contain only 2D images.
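To make the asymmetry between gallery and probe concrete, the sketch below models the two kinds of samples as plain data structures. It is a minimal illustration under assumed conventions, not the project's actual data format: the class names `GalleryModel` and `ProbeImage`, the per-vertex `uv` layout, and the nearest-neighbour texture lookup in `vertex_colors` (with `v = 0` mapped to the top texture row) are all hypothetical choices.

```python
from dataclasses import dataclass
from typing import Optional

import numpy as np


@dataclass
class GalleryModel:
    """Hypothetical gallery entry: a 3D mesh plus a texture registered with it."""
    subject_id: str
    vertices: np.ndarray  # (V, 3) float, 3D vertex positions (the facial shape)
    faces: np.ndarray     # (F, 3) int, triangle vertex indices
    uv: np.ndarray        # (V, 2) float in [0, 1], per-vertex texture coordinates
    texture: np.ndarray   # (H, W, 3) uint8 image registered with the shape

    def __post_init__(self) -> None:
        # Basic shape checks so that shape and texture stay consistent.
        n_vertices = self.vertices.shape[0]
        if self.vertices.ndim != 2 or self.vertices.shape[1] != 3:
            raise ValueError("vertices must have shape (V, 3)")
        if self.faces.ndim != 2 or self.faces.shape[1] != 3:
            raise ValueError("faces must have shape (F, 3)")
        if self.faces.size and self.faces.max() >= n_vertices:
            raise ValueError("faces reference a vertex that does not exist")
        if self.uv.shape != (n_vertices, 2):
            raise ValueError("uv must have shape (V, 2)")
        if self.texture.ndim != 3:
            raise ValueError("texture must have shape (H, W, C)")

    def vertex_colors(self) -> np.ndarray:
        """Sample one texture color per vertex (nearest texel, assumed uv convention)."""
        h, w = self.texture.shape[:2]
        cols = np.clip(np.rint(self.uv[:, 0] * (w - 1)), 0, w - 1).astype(int)
        rows = np.clip(np.rint(self.uv[:, 1] * (h - 1)), 0, h - 1).astype(int)
        return self.texture[rows, cols]


@dataclass
class ProbeImage:
    """Hypothetical probe sample: only a 2D image, with unknown pose."""
    image: np.ndarray                 # (H, W) or (H, W, 3) pixels
    subject_id: Optional[str] = None  # ground-truth label, used only for evaluation
```

The point of the structures is the gap they expose: a `GalleryModel` carries explicit 3D geometry, while a `ProbeImage` carries only pixels, so any matching strategy has to either recover shape from the 2D side or render the 3D side into 2D.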

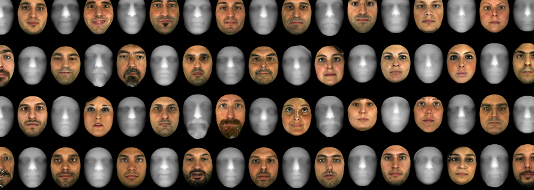

Facial shape as a 3D mesh and a 2D component as a texture registered with the shape

As defined, this scenario is an ill-posed problem because of the gap between the information available in the gallery (3D shape plus registered texture) and the information available in the probe (2D images only).

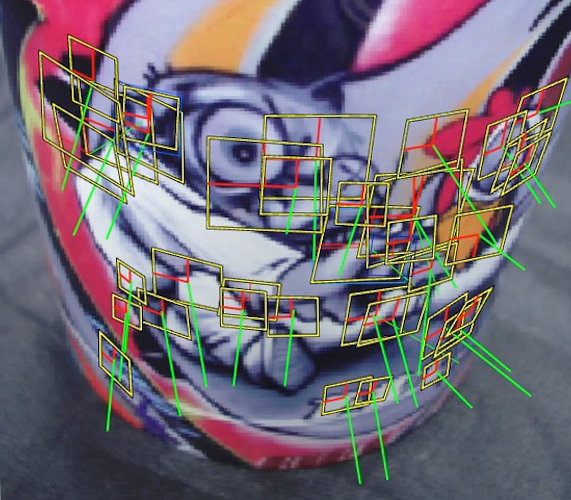

In the experiments, we evaluate both the quality of the 3D shape estimated from multiple 2D images and the performance of the implemented face recognition pipeline over a range of facial poses in the probe set, up to ±45 degrees.
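The pose range mentioned above can be illustrated by rotating a 3D face shape about the vertical axis and projecting it to 2D. The sketch below assumes a weak-perspective (scaled orthographic) camera and a yaw-only rotation; both are illustrative assumptions rather than the project's documented camera model, and `project_weak_perspective` and `MAX_YAW_DEG` are hypothetical names.

```python
import numpy as np

# Hypothetical bound matching the evaluated probe pose range (±45 degrees).
MAX_YAW_DEG = 45.0


def yaw_rotation(yaw_deg: float) -> np.ndarray:
    """3x3 rotation matrix about the vertical (y) axis."""
    t = np.deg2rad(yaw_deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])


def project_weak_perspective(vertices: np.ndarray, yaw_deg: float,
                             scale: float = 1.0,
                             translation=(0.0, 0.0)) -> np.ndarray:
    """Rotate (V, 3) vertices by a yaw angle, then drop depth (weak perspective).

    Returns (V, 2) image-plane coordinates. Angles outside the assumed
    evaluation range are rejected.
    """
    if abs(yaw_deg) > MAX_YAW_DEG:
        raise ValueError(f"yaw {yaw_deg} outside ±{MAX_YAW_DEG} degrees")
    rotated = vertices @ yaw_rotation(yaw_deg).T  # each row becomes R @ v
    return scale * rotated[:, :2] + np.asarray(translation, dtype=float)
```

For example, sweeping `yaw_deg` over `range(-45, 46, 15)` yields seven 2D views of the same shape, which is one way to visualize how the probe appearance changes across the evaluated poses. Weak perspective is chosen here only because faces are usually far from the camera relative to their depth; a full perspective model would be needed for close-range imagery.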

A future direction is to investigate a method that fuses the 3D face modeling with the face recognition technique developed here to account for pose variations.

Results: baseline vs. our approach

This work was conducted by Iacopo Masi during his internship in 2012/2013 at the IRIS Computer Vision lab, University of Southern California.