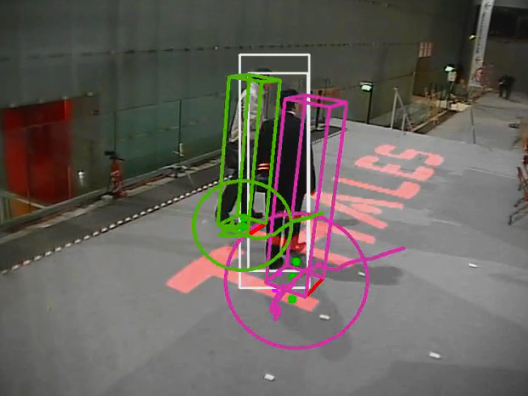

We propose a method for real-time recovery from tracking failure in monocular localization and mapping with a Pan-Tilt-Zoom (PTZ) camera. The method automatically detects tracking failure and recovers from it seamlessly while preserving map integrity.

By extending recent advances in PTZ localization and mapping, the system can quickly and continuously recover from tracking failures by determining the best way to task two different localization modalities.

Continuous Recovery for Real Time Pan Tilt Zoom Localization and Mapping demo

The trade-off involved when choosing between the two modalities is captured by maximizing the information expected to be extracted from the scene map.

This is especially helpful in four main viewing conditions: blurred frames, weakly textured scenes, an out-of-date map, and occlusions due to sensor quantization or moving objects. Extensive tests show that the resulting system is able to recover from several different failures while zooming in on weakly textured scenes, all in real time.

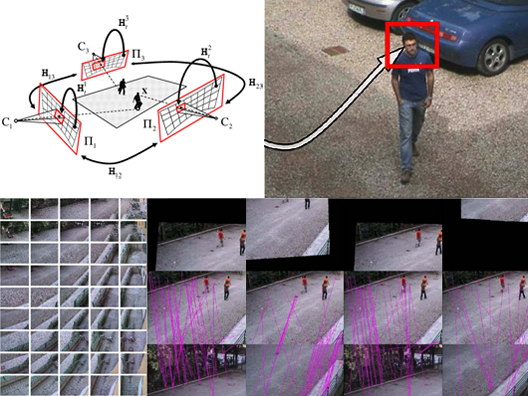

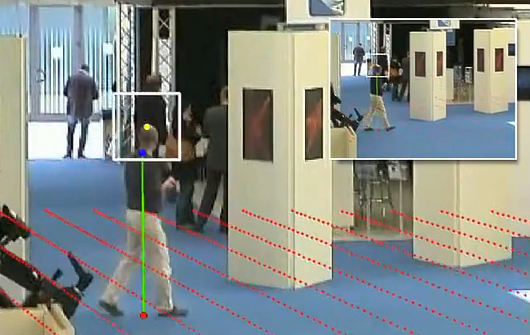

Dataset: we provide the four sequences (Festival, Exhibition, Lab, Backyard) used for testing the recovery module in our AVSS 2011 publication, including the map, the nearest neighbour (NN) keyframes of the map, the calibration results (focal length and image-to-world homography), and a total of 2,376 annotated frames. The annotations are ground-truth feet and head positions, used to check whether the calibration is correct. Annotations are provided as MATLAB workspace files. Data was recorded using an Axis Q6032-E PTZ camera and a Sony SNC-RZ30 at a resolution of 320 x 240 pixels and a frame rate of about 10 FPS. Dataset download.

Details:

- NN keyframes are described in a txt file in which the first number is the id of the frame and the following string is the id (the filename of the image in the map dir) of the corresponding NN keyframe, written as #frame = keyframe id. Note that the file stores only the frame numbers at which a keyframe switch occurs.

- Calibration is provided as a CSV file using the following notation [#frames, h11, h12, h13, …, h31, h32, h33, focal length], where hij is the entry in the i-th row and j-th column of the homography.

- A MATLAB script (plotGrid.m) is provided to superimpose the ground plane on the current image.

- The homography h11..h33 is the image-to-world homography, which maps pixels into meters.

- The ground truth is stored in “ground-truth.mat” and consists of a cell array in which each item holds the feet position and the head position.

- Each sequence includes a main MATLAB script, plotGrid.m, that plots the ground-truth annotations and superimposes the ground plane on the image. ScaleView.m is the script that exploits the calibration to predict head location.

- Note that we have obfuscated most of the faces to preserve anonymity.
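
For readers who prefer to work outside MATLAB, the file layouts described above can be read with a few lines of Python. The following is a minimal sketch, not part of the dataset: the function names are hypothetical, the homography entries are assumed to be stored row by row in the order listed (h11, h12, h13, …, h33), each keyframe line is assumed to look like `frame = keyframe id`, and the sample values in the usage note are toy numbers rather than dataset content. The homography is applied as the list describes, taking a pixel to ground-plane coordinates in meters.

```python
import bisect
import csv
import io


def parse_calibration(text):
    """Parse calibration rows [frame, h11..h33, focal length].

    Returns {frame: (H, focal)} where H is a 3x3 list of rows.
    Assumes the nine homography entries are listed row by row.
    """
    calib = {}
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 11:
            continue  # skip blank or incomplete lines
        values = [float(x) for x in row[:11]]
        h = values[1:10]
        calib[int(values[0])] = ([h[0:3], h[3:6], h[6:9]], values[10])
    return calib


def pixel_to_ground(H, u, v):
    """Map pixel (u, v) to ground-plane coordinates using homography H."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity")
    return x / w, y / w  # divide out the homogeneous coordinate


def parse_keyframe_switches(text):
    """Parse lines assumed to look like 'frame = keyframe id'."""
    switches = []
    for line in text.splitlines():
        if "=" not in line:
            continue
        frame, keyframe = line.split("=", 1)
        switches.append((int(frame.strip()), keyframe.strip()))
    switches.sort()
    return switches


def keyframe_for(switches, frame):
    """Return the keyframe active at `frame`.

    Only switch frames are stored, so the active keyframe is the one
    from the latest switch at or before `frame` (None if there is none).
    """
    frames = [f for f, _ in switches]
    i = bisect.bisect_right(frames, frame) - 1
    return switches[i][1] if i >= 0 else None
```

With a toy calibration row such as `1,1,0,0,0,1,0,0,0,2,500`, `pixel_to_ground(calib[1][0], 10, 20)` divides by the homogeneous coordinate and yields half the pixel coordinates. Because the keyframe file records only switches, `keyframe_for` uses a binary search over the switch frames instead of requiring one entry per frame.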