TANGerINE Tales is a solution for multi-role digital storymaking based on the TANGerINE platform. The goal is to create a digital interactive system for children that stimulates collaboration between its users. From the standpoint of educational psychology, the work addresses respect for roles and the development of literacy and narrative skills.

Testing TANGerINE Tales

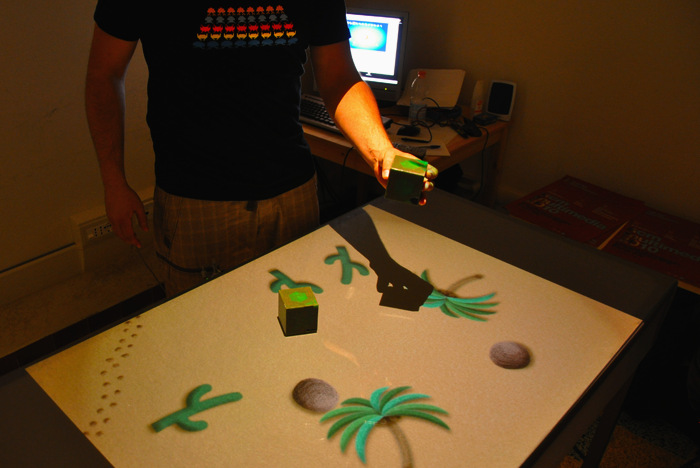

TANGerINE Tales lets children create and tell stories by combining landscapes and characters of their own choosing. First, children select the elements that will be part of the game and explore the environment in which they will create their story. Next, they can record their voices and the dynamics of the game. Finally, they can replay their self-made story on the interactive table.

Users interact with the system through the TANGerINE tangible interface, which consists of two smart cubes (one for each child) and an interactive table. The children manipulate the cubes, which send data to the computer over a Bluetooth connection.

The main assumption is that the interaction takes place through the collaboration between two children who have different roles: one of them will actively interact to control the actions of the main character of the story, while the other will control the environmental events in response to the movements and actions of the character.
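As a rough illustration of this two-role setup, the sketch below routes events from each cube to the role assigned to it. It is a minimal sketch under stated assumptions, not the TANGerINE implementation: the names `Role`, `CubeEvent`, `StorySession`, the cube identifiers, and the gesture labels are all hypothetical, and the Bluetooth transport is left out entirely.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    CHARACTER = "character"      # child steering the main character
    ENVIRONMENT = "environment"  # child triggering environmental events


@dataclass(frozen=True)
class CubeEvent:
    cube_id: str   # hypothetical identifier of the cube that sent the data
    gesture: str   # hypothetical gesture label, e.g. "tilt_left"


class StorySession:
    """Routes each cube's events to the role assigned to that cube."""

    def __init__(self, character_cube: str, environment_cube: str):
        if character_cube == environment_cube:
            raise ValueError("each child needs their own cube")
        self._roles = {
            character_cube: Role.CHARACTER,
            environment_cube: Role.ENVIRONMENT,
        }
        # Ordered (role, gesture) pairs; a log like this could drive a replay.
        self.log = []

    def handle(self, event: CubeEvent) -> Role:
        role = self._roles[event.cube_id]  # raises KeyError for an unknown cube
        self.log.append((role, event.gesture))
        return role


# Usage: one cube per child, each bound to a single role.
session = StorySession(character_cube="cube-A", environment_cube="cube-B")
session.handle(CubeEvent("cube-A", "tilt_left"))
session.handle(CubeEvent("cube-B", "shake"))
```

Binding a role to a cube rather than to an individual gesture keeps the two children's responsibilities separate, which mirrors the respect of roles the system is meant to encourage.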

The target users of TANGerINE Tales are 7- to 8-year-olds attending the third year of elementary school. This choice follows research on psychological methods for collaborative learning, on Human-Computer Interaction, and on tangible interfaces; we drew on guidelines for learning supported by technological tools (computers, cell phones, tablet PCs, etc.) and on guidelines derived from storytelling projects for children.

You can see pictures of the interface on the MICC Flickr account!