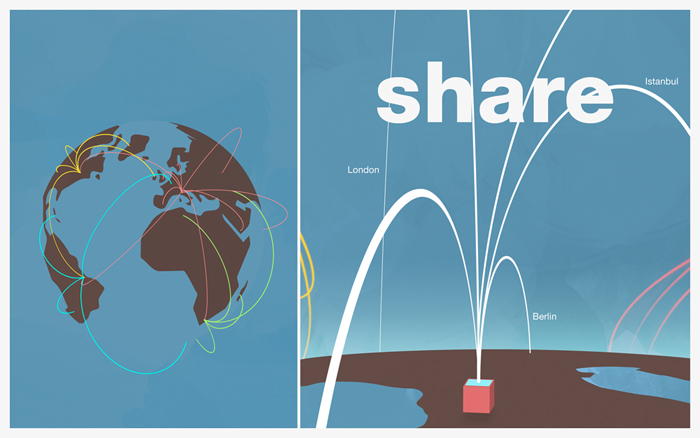

The RIMSI project, funded by Regione Toscana, covers the study, experimentation and development of a protocol for validating procedures, as well as the implementation of a prototype multimedia software system that uses interactive simulation techniques to improve protocols and training in emergency medicine.

Medical simulation software currently on the market can only reproduce very simple scenarios (a single patient) with an equally limited number of actors (usually just one doctor and one nurse). In addition, the available "high-fidelity" simulation scenarios are almost exclusively limited to cardiopulmonary resuscitation and emergency anesthesia. Finally, the user can play only a single role (doctor or nurse), while the actions of the other operators are controlled by the computer.

To overcome these significant limitations of the programs currently available, we propose to create software capable of reproducing realistic scenarios (the inside of an emergency room, the scene of a car accident, etc.) in both single-user mode (the user controls a single operator while the computer controls the other characters) and multi-user mode (each user controls one of the actors in the scenario).

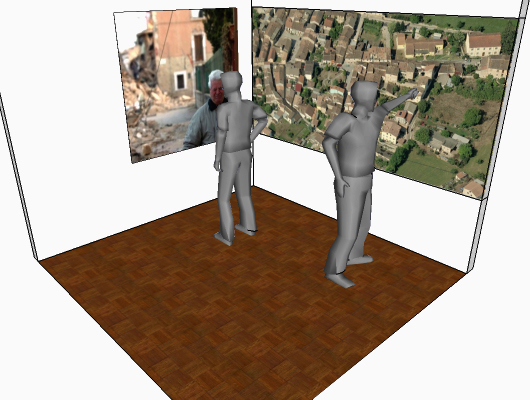

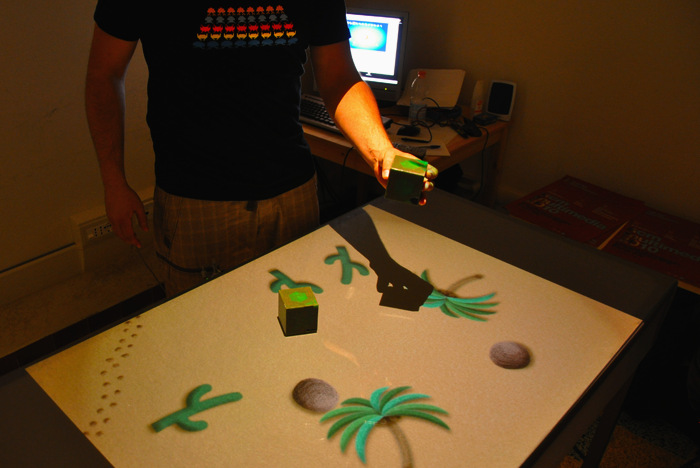

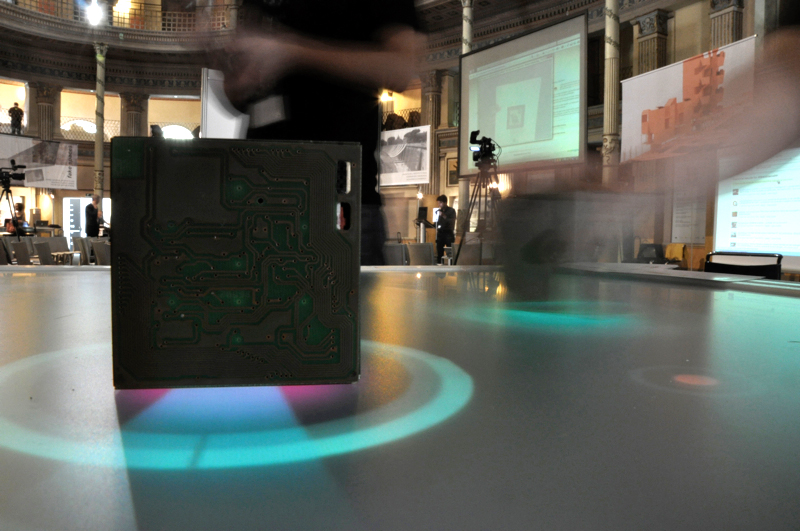

Our proposal is to develop a multi-user application that allows users to interact both via mouse and keyboard and through body gestures. To this end, we are currently developing a 3D training scenario in which learners will be able to interact through a Microsoft Kinect.

This work in progress will be presented at the Workshop on User Experience in e-Learning and Augmented Technologies in Education (UXeLATE), held at ACM Multimedia in Nara, Japan.