VIVIT is a three-year project led by the Media Integration and Communication Center (MICC) and the Accademia della Crusca, funded through the Italian government's FIRB funding scheme. As part of this project, MICC has developed the VIVIT web portal to give visibility to cultural content that may appeal to second- and third-generation Italians living abroad.

The main aim of the VIVIT web portal is to provide people of Italian origin with high-quality content on the history of the nation and of its language, together with learning materials that let viewers assess and improve their language proficiency.

Development of the VIVIT web portal officially started in 2010, when the information architecture and content organization were first discussed. The VIVIT project required the web portal to let users and prospective teachers interact with each other, and to produce and reorganize content to be shown online to language and culture learners. Given these premises, it was decided to use a CMS (Content Management System), since defining user roles and supporting interaction between users are inherent to this kind of software.

VIVIT is being developed on Drupal, a free and open-source CMS written in PHP. Drupal's feature set has grown considerably in recent years, and it is now widely regarded, together with the well-known WordPress and Joomla, as one of the leading CMSs. A large number of user-contributed plugins (modules, in Drupal terms) and layout themes is available, since developing them is relatively simple and widely documented.

At the time of writing, the architecture of the VIVIT portal is mostly complete: users can browse content, comment on it, bookmark pages and reorganize them from within the platform. Users with the teacher role can also share these self-created content units with other users, building custom learning paths through the portal's contents. Audio and video resources are available, as well as interactive learning materials built on a custom jQuery plugin developed in-house at MICC.

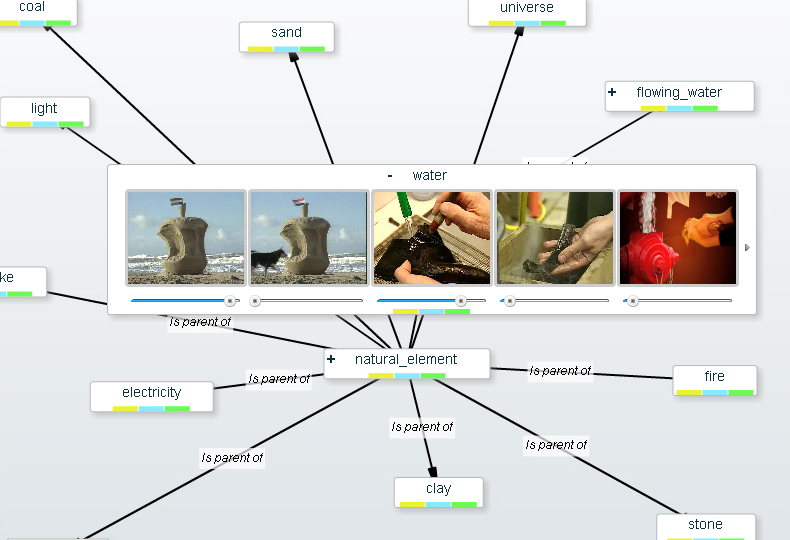
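The sharing rule described above (every user can assemble pages into their own units, but only teachers can share them with others) is essentially a role-based permission check. In VIVIT this is handled by Drupal's own role and permission system in PHP; the Java sketch below only illustrates the rule in a self-contained way. The role names, permission names and the `LearningPath` class are hypothetical and do not come from the portal's code.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.EnumSet;
import java.util.List;
import java.util.Set;

public class LearningPathDemo {
    // Hypothetical roles; the portal's actual Drupal role names are not given in the text.
    enum Role { STUDENT, TEACHER }

    enum Permission { BROWSE, COMMENT, BOOKMARK, REORGANIZE, SHARE }

    // Every role can browse, comment, bookmark and reorganize; only teachers may share.
    static Set<Permission> permissionsFor(Role role) {
        Set<Permission> perms = EnumSet.of(Permission.BROWSE, Permission.COMMENT,
                Permission.BOOKMARK, Permission.REORGANIZE);
        if (role == Role.TEACHER) {
            perms.add(Permission.SHARE);
        }
        return perms;
    }

    record User(String name, Role role) {}

    // A learning path: an ordered list of page identifiers assembled by its owner.
    static final class LearningPath {
        final User owner;
        private final List<String> pages = new ArrayList<>();
        private final List<User> sharedWith = new ArrayList<>();

        LearningPath(User owner) { this.owner = owner; }

        void addPage(String pageId) { pages.add(pageId); }

        // Rejects the operation if the owner's role lacks the SHARE permission.
        void share(User recipient) {
            if (!permissionsFor(owner.role()).contains(Permission.SHARE)) {
                throw new IllegalStateException(owner.name() + " may not share learning paths");
            }
            sharedWith.add(recipient);
        }

        boolean isVisibleTo(User user) {
            return user.equals(owner) || sharedWith.contains(user);
        }

        List<String> pages() { return Collections.unmodifiableList(pages); }
    }

    public static void main(String[] args) {
        User teacher = new User("anna", Role.TEACHER);
        User student = new User("marco", Role.STUDENT);
        LearningPath path = new LearningPath(teacher);
        path.addPage("history/unification");
        path.addPage("language/dialects");
        path.share(student);
        System.out.println(path.isVisibleTo(student)); // true
    }
}
```

Keeping permissions as a set derived from the role, rather than checking role names at every call site, mirrors how a CMS lets administrators grant or revoke a capability for a whole role at once.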

Users with sufficient permissions can also semantically process and annotate texts inside the portal (that is, attach resources that describe their content) using Homer, a named-entity and topic extraction servlet also developed at MICC. Basic tagging is provided by Drupal core modules, while the text analysis feature combines the contributed tagging module with a custom module written specifically for the VIVIT portal. Homer is a Java application based on GATE, a toolkit for a broad range of NLP (Natural Language Processing) tasks.

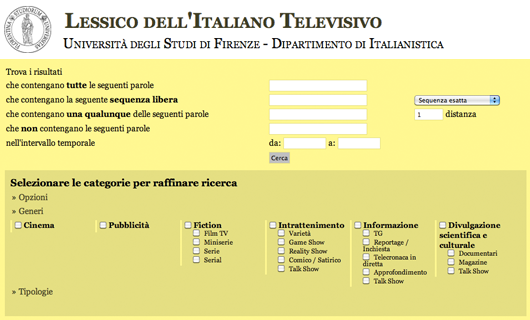

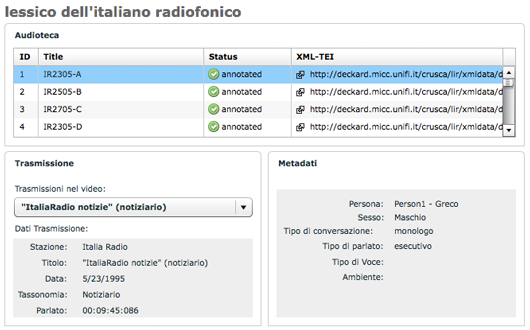
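Turning the output of an entity extractor into tags usually involves grouping the annotations by type and collapsing variant spellings into a single term. The sketch below illustrates that step only; it assumes a hypothetical `Entity` record (type plus surface form) and does not reproduce Homer's actual response format or the custom Drupal module, which is written in PHP.

```java
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

public class EntityTagger {
    // Hypothetical shape of one annotation returned by an entity/topic extractor.
    record Entity(String type, String surfaceForm) {}

    // Groups entities by type (one tag vocabulary per type) and normalizes surface forms
    // by trimming and lowercasing, so "Firenze" and "firenze " become a single tag.
    static Map<String, Set<String>> toTags(List<Entity> entities) {
        Map<String, Set<String>> vocabularies = new TreeMap<>();
        for (Entity e : entities) {
            String tag = e.surfaceForm().trim().toLowerCase(Locale.ITALIAN);
            if (tag.isEmpty()) continue; // skip empty annotations
            vocabularies.computeIfAbsent(e.type(), k -> new TreeSet<>()).add(tag);
        }
        return vocabularies;
    }

    public static void main(String[] args) {
        List<Entity> found = List.of(
                new Entity("Location", "Firenze"),
                new Entity("Person", "Dante Alighieri"),
                new Entity("Location", "firenze "),
                new Entity("Topic", "lingua"));
        System.out.println(toTags(found));
        // {Location=[firenze], Person=[dante alighieri], Topic=[lingua]}
    }
}
```

Sorted collections are used only to make the output deterministic; a real module would also need to match the normalized terms against existing taxonomy entries.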

The VIVIT web portal also gives access to additional resources in the same cultural field, in particular LIT (Lexicon of Italian Television) and LIR (Lexicon of Italian Radio). LIT is a Java search engine that uses Lucene to index about 160 excerpts of Italian TV programs, each about 30 minutes long, selected from the RAI video archive. LIT also offers a back-end in which it is possible to stream the video sequences, synchronize the transcriptions with the audio-video sources, annotate the materials with custom taxonomies and add specific metadata. LIR is a similar system built on an audio archive of radio segments from several Italian sources. Linguists are currently using LIT and LIR for research in computational linguistics.
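Because the transcriptions are synchronized with the video, a search over them can point the viewer to an exact moment in an excerpt. LIT does this with Lucene; the stdlib-only sketch below is not Lucene's API but a toy inverted index that shows the underlying idea: each transcript line is stored with its excerpt and start offset, and a query returns the lines containing all of its terms. The `Segment` record, the excerpt identifiers and the sample sentences are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

public class TranscriptIndex {
    // One transcription line synchronized with its excerpt (start offset in seconds).
    record Segment(String excerptId, int startSeconds, String text) {}

    private final List<Segment> segments = new ArrayList<>();
    // Inverted index: term -> ids (positions in `segments`) of the lines containing it.
    private final Map<String, Set<Integer>> postings = new HashMap<>();

    private static String[] tokenize(String text) {
        // Split on anything that is not a letter or digit; \p{L} keeps accented letters.
        return text.toLowerCase(Locale.ITALIAN).split("[^\\p{L}\\p{N}]+");
    }

    void add(Segment s) {
        int id = segments.size();
        segments.add(s);
        for (String term : tokenize(s.text())) {
            if (!term.isEmpty()) {
                postings.computeIfAbsent(term, k -> new LinkedHashSet<>()).add(id);
            }
        }
    }

    // Returns the segments containing every query term (boolean AND), in insertion order.
    List<Segment> search(String query) {
        Set<Integer> hits = null;
        for (String term : tokenize(query)) {
            if (term.isEmpty()) continue;
            Set<Integer> ids = postings.getOrDefault(term, Set.of());
            if (hits == null) {
                hits = new LinkedHashSet<>(ids);
            } else {
                hits.retainAll(ids);
            }
        }
        List<Segment> result = new ArrayList<>();
        if (hits != null) {
            for (int id : hits) result.add(segments.get(id));
        }
        return result;
    }

    public static void main(String[] args) {
        TranscriptIndex index = new TranscriptIndex();
        index.add(new Segment("excerpt-A", 12, "Buonasera, ecco le notizie del giorno."));
        index.add(new Segment("excerpt-A", 47, "Le notizie sportive seguiranno."));
        index.add(new Segment("excerpt-B", 5, "Buonasera a tutti e benvenuti!"));
        for (Segment s : index.search("buonasera")) {
            // prints "excerpt-A @ 12s" then "excerpt-B @ 5s"
            System.out.println(s.excerptId() + " @ " + s.startSeconds() + "s");
        }
    }
}
```

A production engine such as Lucene adds what this toy omits: language-specific analyzers, relevance ranking, phrase queries and on-disk index segments.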