MICC (Media Integration and Communication Center) of the University of Florence and Thales Italy have established a partnership to create a joint university-industry laboratory to research and develop innovative solutions for safety, sensitive sites, critical infrastructure and transport.

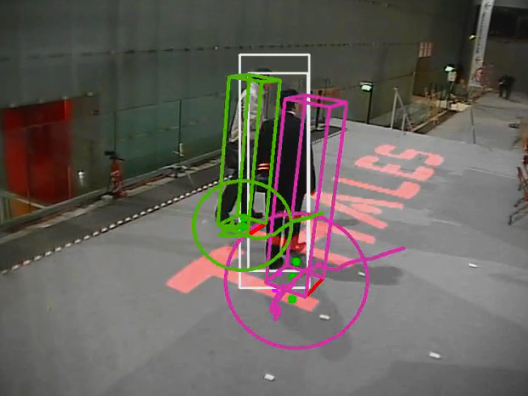

MICC - Thales joint lab demo at Thales Technoday 2011

The technology program focuses mainly, but not exclusively, on surveillance through video analysis, employing computer vision and pattern recognition technologies.

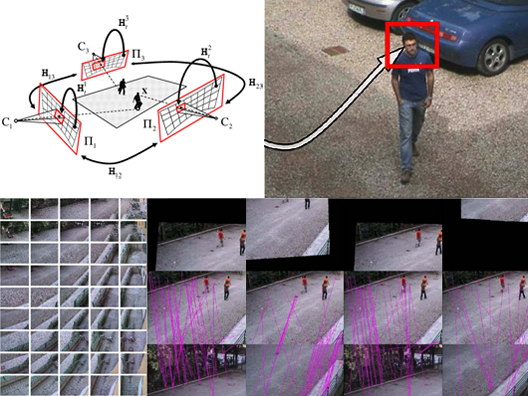

An active field of research, pursued from 2009 to 2011, was how to increase the effectiveness of classic video surveillance systems by using active sensors (Pan-Tilt-Zoom, or PTZ, cameras) to obtain higher-resolution images of tracked targets.

MICC - Thales joint lab projects

The collaboration made it possible to begin studying the inherent complexities of PTZ camera configuration and target-tracking algorithms, and it focused on the study and verification of a set of basic video analysis functionalities.


The joint lab presented two important demos at two major events: the Festival della Creatività in Florence (Italy) in October 2010, and Thales Technoday 2011 in Paris (France) in January 2011. At the latter, the PTZ Tracker was selected as a VIP Demo (Very ImPortant Demo).

Some videos of these events: