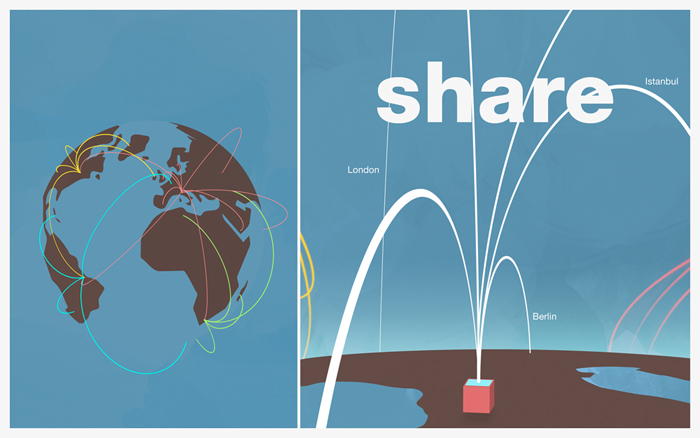

This project aims to build a lightweight, flexible and extensible Cocoa framework for creating multitouch and, more generally, tangible apps. It implements basic gesture recognition and lets each developer define and set up their own gestures easily. Because it is built on Cocoa, we hope this framework will work well with Quartz and Core Animation to create fun and useful apps. It also offers many off-the-shelf widgets for quickly building your own NUI app.

CocoNUIT: Cocoa Natural User Interface & Tangible

The growing interest in multitouch technologies, and even more so in tangible user interfaces, has been pushed forward by the development of system libraries designed to make it easier to implement graphical natural human-computer interaction (NHCI) interfaces. More and more commercial frameworks are becoming available, and the open source community is also increasingly interested in this field. Many of these projects are similar to one another, each with its own limits and strengths: SparshUI, pyMT and the Cocoa Multi-touch Framework are just a few examples.

When it comes to evaluating an NHCI framework, several attributes have to be taken into account. One of the major requirements is input device independence; immediately after comes flexibility with respect to the underlying technology that makes it possible to recognize the different kinds of interaction, which makes the framework independent of changes in the computer vision engine. The results of this processing must then be displayed through a user interface that offers high graphical throughput, in order to meet the requirements of an NHCI environment.
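Input device independence is commonly achieved by translating the output of every device into one normalized event type behind a shared interface. The Swift sketch below illustrates that idea under stated assumptions: `TouchEvent`, `InputSource`, `CameraTrackerSource`, `ScriptedSource` and `collect(from:)` are hypothetical names chosen for illustration, not CocoNUIT's actual API, and the camera resolution is an arbitrary example value.

```swift
// Hypothetical normalized event: every input device is translated into this
// single type, so the rest of the framework never sees device-specific data.
struct TouchEvent {
    enum Phase { case began, moved, ended }
    let id: Int      // identifier of one finger or tangible object
    let x: Double    // normalized coordinates in [0, 1]
    let y: Double
    let phase: Phase
}

// Hypothetical abstraction: a vision engine, a trackpad or a test harness
// only needs to conform to this protocol.
protocol InputSource {
    func poll() -> [TouchEvent]
}

// Converts pixel coordinates from a camera-based tracker into normalized events.
struct CameraTrackerSource: InputSource {
    let width: Double   // assumed camera resolution, in pixels
    let height: Double
    var blobs: [(id: Int, px: Double, py: Double)]

    func poll() -> [TouchEvent] {
        blobs.map { TouchEvent(id: $0.id, x: $0.px / width, y: $0.py / height, phase: .moved) }
    }
}

// Replays predefined events; useful for testing without any hardware.
struct ScriptedSource: InputSource {
    var events: [TouchEvent]
    func poll() -> [TouchEvent] { events }
}

// The consumer depends only on the protocol, never on a concrete device.
func collect(from sources: [any InputSource]) -> [TouchEvent] {
    sources.flatMap { $0.poll() }
}

let camera = CameraTrackerSource(width: 640, height: 480, blobs: [(id: 1, px: 320, py: 240)])
let script = ScriptedSource(events: [TouchEvent(id: 2, x: 0.1, y: 0.9, phase: .began)])
let events = collect(from: [camera, script])
print(events.map { "\($0.id): (\($0.x), \($0.y))" })
```

Because `collect(from:)` depends only on the protocol, replacing the vision engine with a different tracker would mean writing one new conforming type, with no change to the code that consumes the events.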

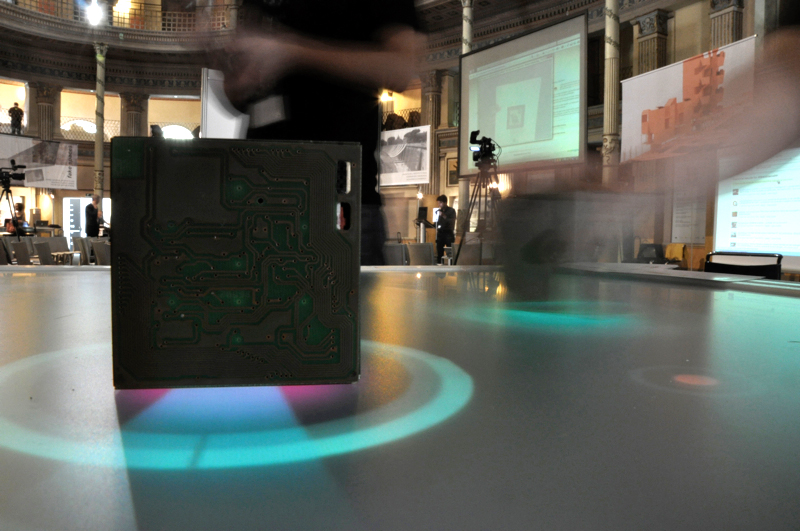

None of the available open source frameworks fully met the requirements defined for the project, which led to the development of a complete framework from scratch: CocoNUIT, the Cocoa Natural User Interface & Tangible. The framework is designed to be lightweight, flexible and extensible, and helps in the development of multitouch and tangible applications. It implements gesture recognition and lets developers define and set up their own new gestures. The framework was built on top of Cocoa in order to take advantage of the accelerated Mac OS X graphics libraries for drawing and animation, such as Quartz 2D and Core Animation.
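Letting developers define their own gestures can be sketched as a small protocol that inspects the active touches and reports a match. The example below assumes a hypothetical `Gesture` protocol and a user-defined `PinchGesture`; neither is the framework's real gesture API, the `Point` type stands in for `CGPoint`, and the 10% threshold is an arbitrary choice.

```swift
// Hypothetical point type (a Cocoa implementation would use CGPoint).
struct Point { var x: Double; var y: Double }

// One tracked touch: where it started and where it is now.
struct TouchTrack {
    let start: Point
    let current: Point
}

// Hypothetical gesture protocol: a developer adds a new gesture by writing
// a type that inspects the active touches and reports whether it matched.
protocol Gesture {
    var name: String { get }
    func recognize(_ touches: [TouchTrack]) -> Bool
}

// Euclidean distance between two points.
func distance(_ a: Point, _ b: Point) -> Double {
    ((a.x - b.x) * (a.x - b.x) + (a.y - b.y) * (a.y - b.y)).squareRoot()
}

// Example user-defined gesture: a two-finger pinch, matched when the relative
// change in finger distance exceeds `threshold`.
struct PinchGesture: Gesture {
    let name = "pinch"
    var threshold = 0.1

    // Ratio between the current and the initial finger distance.
    func scale(_ touches: [TouchTrack]) -> Double? {
        guard touches.count == 2 else { return nil }
        let before = distance(touches[0].start, touches[1].start)
        let after = distance(touches[0].current, touches[1].current)
        return before > 0 ? after / before : nil
    }

    func recognize(_ touches: [TouchTrack]) -> Bool {
        guard let s = scale(touches) else { return false }
        return abs(s - 1) > threshold
    }
}

// Two fingers moving apart: initial distance 5, final distance 15.
let spread = [
    TouchTrack(start: Point(x: 0, y: 0), current: Point(x: -3, y: -4)),
    TouchTrack(start: Point(x: 3, y: 4), current: Point(x: 6, y: 8)),
]
let pinch = PinchGesture()
print(pinch.recognize(spread), pinch.scale(spread) ?? 0)
```

Keeping each gesture in its own type means a new gesture can be added without touching existing recognizers.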

The CocoNUIT framework is divided into three basic modules:

- event management

- multitouch interface

- gesture recognition

From a high-level point of view, the computer vision engine sends all the interaction events performed by users to the framework. These events, or messages, are then dispatched to each graphical object, or layer, present on the interface. Each layer can determine whether a touch concerns it simply by checking whether the touch coordinates fall within the layer's area: if so, the layer activates its recognition procedures and, if a gesture gives a positive match, the view is updated accordingly. This design favors software modularity: it is easy to replace or add input devices, or to extend the gesture recognition engine simply by adding new, purpose-built gesture classes.
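The dispatch and hit-testing flow described above can be sketched as follows. All types here (`Point`, `Rect`, `Layer`, `Dispatcher` and the closure-based `Recognizer`) are hypothetical simplifications for illustration; a real Cocoa implementation would more likely build on `CALayer` and `CGRect`, and the "view update" is reduced to appending to a log.

```swift
// Hypothetical geometry types; a Cocoa implementation would use CGPoint/CGRect.
struct Point { var x: Double; var y: Double }

struct Rect {
    var x: Double, y: Double, width: Double, height: Double

    // Hit test: does the point fall within this area?
    func contains(_ p: Point) -> Bool {
        p.x >= x && p.x < x + width && p.y >= y && p.y < y + height
    }
}

// Hypothetical recognizer: returns a gesture name on a positive match, nil otherwise.
typealias Recognizer = (Point) -> String?

final class Layer {
    let name: String
    let frame: Rect
    var recognizers: [Recognizer] = []
    private(set) var log: [String] = []   // stand-in for updating the view

    init(name: String, frame: Rect) {
        self.name = name
        self.frame = frame
    }

    // Every layer receives every event and decides by itself whether it is concerned.
    func handle(_ touch: Point) {
        guard frame.contains(touch) else { return }
        for recognize in recognizers {
            if let gesture = recognize(touch) {
                log.append(gesture)
            }
        }
    }
}

// Hypothetical dispatcher: forwards each event from the vision engine to every layer.
struct Dispatcher {
    let layers: [Layer]
    func dispatch(_ touch: Point) {
        layers.forEach { $0.handle(touch) }
    }
}

let button = Layer(name: "button", frame: Rect(x: 0, y: 0, width: 100, height: 50))
let slider = Layer(name: "slider", frame: Rect(x: 0, y: 100, width: 300, height: 40))
button.recognizers.append({ _ in "tap" })
slider.recognizers.append({ p in p.x > 150 ? "slide-right" : nil })

let dispatcher = Dispatcher(layers: [button, slider])
dispatcher.dispatch(Point(x: 20, y: 20))    // inside the button only
dispatcher.dispatch(Point(x: 200, y: 110))  // inside the slider, right half
dispatcher.dispatch(Point(x: 500, y: 500))  // outside every layer: ignored
print(button.log, slider.log)
```

Since each layer filters events on its own, adding a new widget or gesture does not require changing the dispatcher, which mirrors the modularity argument made above.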