Prof. Arnold Smeulders, leader of the Intelligent Systems Lab Amsterdam, will give a lecture at MICC entitled “Computer Vision in 2015” on June 18 at 11 AM in aula 206, CDM, Viale Morgagni 40/44, Firenze.

Combining Social Media with Sensor Data for Situation Awareness and Photography

With the spread of physical sensors and social sensors, we are living in a world of big sensor data. Although the two kinds of sensors generate heterogeneous data, they often provide complementary information. Combining them enables sensor-enhanced social media analysis, which can lead to a better understanding of dynamically occurring situations.

Mohan Kankanhalli

We present two works that illustrate this general theme. In the first work, we use event-related information detected by physical sensors to filter and then mine geo-located social media data, in order to obtain high-level semantic information. Specifically, we apply a suite of visual concept detectors to video camera feeds to generate “camera tweets” and develop a novel multi-layer tweeting cameras framework. We fuse “camera tweets” and social media tweets via a “Concept Image” (Cmage).

Cmages are 2-D maps of concept signals, which serve as a common data representation to facilitate event detection. We define a set of operators and analytic functions that the user can apply to Cmages, not only to discover occurrences of events but also to analyze patterns of evolving situations. The feasibility and effectiveness of our framework are demonstrated with a large-scale dataset containing feeds from 150 CCTV cameras in New York City and Twitter data.
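To make the idea of a 2-D map of concept signals more concrete, here is a minimal sketch. It is not the authors' implementation: the grid resolution, the bounding box, and the names `build_cmage`, `add_cmages`, and `hot_cells` are assumptions introduced purely for illustration. It bins geo-located concept observations into a grid, fuses two grids element-wise, and lists the cells whose fused signal reaches a threshold.

```python
def build_cmage(observations, bounds, shape):
    """Accumulate (lat, lon, strength) observations into a rows x cols grid.

    Hypothetical helper: the abstract does not specify how Cmages are built.
    """
    lat_min, lat_max, lon_min, lon_max = bounds
    rows, cols = shape
    grid = [[0.0] * cols for _ in range(rows)]
    for lat, lon, strength in observations:
        if not (lat_min <= lat <= lat_max and lon_min <= lon <= lon_max):
            continue  # ignore observations outside the mapped area
        # Map the coordinate to a cell index; clamp the upper edge into the last cell.
        r = min(int((lat - lat_min) / (lat_max - lat_min) * rows), rows - 1)
        c = min(int((lon - lon_min) / (lon_max - lon_min) * cols), cols - 1)
        grid[r][c] += strength
    return grid


def add_cmages(a, b):
    """Element-wise sum of two grids (an assumed, very simple fusion operator)."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def hot_cells(cmage, threshold):
    """Return (row, col) indices of cells whose signal is at least `threshold`."""
    return [(r, c)
            for r, row in enumerate(cmage)
            for c, value in enumerate(row)
            if value >= threshold]


# Made-up observations of one concept (e.g. "crowd") from two sources.
BOUNDS = (40.0, 41.0, -75.0, -74.0)  # lat_min, lat_max, lon_min, lon_max
camera = build_cmage([(40.25, -74.75, 1.0)], BOUNDS, (2, 2))
tweets = build_cmage([(40.3, -74.6, 2.0), (40.75, -74.25, 0.5)], BOUNDS, (2, 2))
fused = add_cmages(camera, tweets)
candidate_events = hot_cells(fused, 2.0)
```

Because both sources share one grid layout, any operator defined on a grid applies equally to camera-derived and tweet-derived signals, which is the role the abstract gives to the common representation.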

We also describe our preliminary “Tweeting Camera” prototype, in which a smart camera tweets semantic information through Twitter so that people can follow it and stay updated about events around the camera location. Our second work combines photography knowledge learned from social media with camera sensor data to provide real-time photography assistance.

Professional photographers use their knowledge and exploit the current context to take high-quality photographs. However, this is often challenging for an amateur user. Social media and physical sensors give us an opportunity to improve the photo-taking experience for such users. We have developed a machine-learning-based photography model that is augmented with contextual information, such as time, geo-location, environmental conditions and type of image, which influences the quality of photo capture.

The sensors available in a camera system are utilized to infer the current scene context. As scene composition and camera parameters play a vital role in the aesthetics of a captured image, our method addresses the problem of learning photographic composition and camera parameters. We also propose the idea of computing the photographic composition bases, eigenrules and baserules, to illustrate the proposed composition learning. Thus, the proposed system can be used to provide real-time feedback to the user regarding scene composition and camera parameters while the scene is being captured.

Large-Scale Person Re-Identification

In this talk, Svebor Karaman introduces a proposal for person re-identification. Person re-identification is usually defined as the task of retrieving images of one individual in a network of cameras, given one or more gallery images.
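The retrieval formulation above can be illustrated with a minimal sketch. It only shows the generic ranking step, not the semi-supervised method presented in the talk. The descriptors, the detection identifiers, and the choice of cosine similarity are assumptions made for illustration; real systems use learned appearance features.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_detections(reference, detections):
    """Sort detection ids from most to least similar to the reference descriptor.

    `reference` is the descriptor of a known image of the person;
    `detections` maps a (hypothetical) detection id to its descriptor.
    """
    scored = [(cosine_similarity(reference, feat), det_id)
              for det_id, feat in detections.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [det_id for _, det_id in scored]


# Toy 2-D descriptors, invented for the example.
reference = [1.0, 0.0]
detections = {
    "cam2_det_a": [0.9, 0.1],
    "cam3_det_b": [0.0, 1.0],
    "cam1_det_c": [0.5, 0.5],
}
ranking = rank_detections(reference, detections)
```

The ranked list is what standard evaluation protocols score, for example by checking how high the correct identity appears.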

Standard scenarios from the literature are analyzed, and their limitations are pointed out with regard to the real-world applications they attempt to model, e.g. in a video surveillance context.

The author introduces a proposal whose semi-supervised approach can leverage the large number of detections that can be obtained in real-world applications.

Building a Personalized Multimedia Museum Experience

In this talk, Svebor Karaman presented the MNEMOSYNE system, developed at the MICC to offer a personalized multimedia museum experience through passive profiling of the visitors.

The lecturer detailed all the components of the system that include system administration, databases, computer vision and natural interaction. The MNEMOSYNE system is installed at the Bargello Museum.

User Intentions in Multimedia Information Systems

In this talk, Dr. Mathias Lux, Assistant Professor at the Institute for Information Technology (ITEC) at Klagenfurt University, will investigate possibilities, challenges and opportunities for integrating user intentions into multimedia production, sharing, and retrieval.

Abstract: How can we build better multimedia information systems? Management and organization of multimedia data have become easier thanks to the wide availability of metadata as well as advances in content-based image retrieval (CBIR); these advances, however, do not address what matters most: the actual users of multimedia information systems. The goals and aims of users, i.e., their intentions, need to be put into focus in some creative way.

Action! Improved Action Recognition and Localization in Video

In this talk, Prof. Stan Sclaroff of the Department of Computer Science at Boston University will describe work on action recognition and localization in video.

Abstract: Web images and video present a rich, diverse, and challenging resource for learning and testing models of human actions.

In the first part of this talk, he will describe an unsupervised method for learning human action models. The approach is unsupervised in the sense that it requires no human intervention other than the action keywords to be used to form text queries to Web image and video search engines; thus, we can easily extend the vocabulary of actions, by simply making additional search engine queries.

In the second part of this talk, Prof. Sclaroff will describe a Multiple Instance Learning framework for exploiting properties of the scene, objects, and humans in video to gain improved action classification. In the third part, he will describe a new representation for action recognition and localization, Hierarchical Space-Time Segments, which helps in both recognizing and localizing actions in video. An unsupervised method is proposed that generates this representation from video; it extracts both static and non-static relevant space-time segments and preserves their hierarchical and temporal relationships.

This work was conducted in collaboration with Shugao Ma and Jianming Zhang (Boston U), and Nazli Ikizler Cinbis (Hacettepe University).

The work also appears in the following papers:

- “Web-based Classifiers for Human Action Recognition”, IEEE Trans. on Multimedia

- “Object, Scene and Actions: Combining Multiple Features for Human Action Recognition”, ECCV 2010

- “Action Recognition and Localization by Hierarchical Space-Time Segments”, ICCV 2013

Objects and Poses

In this talk, Prof. Stan Sclaroff of the Department of Computer Science at Boston University will describe work related to object recognition and pose inference.

In the first part of the talk, he will describe a machine learning formulation for object detection that is based on set representations of the contextual elements. Directly training classification models on sets of unordered items, where each set can have varying cardinality, can be difficult. To overcome this problem, he proposes SetBoost, a discriminative learning algorithm for building set classifiers.

In the second part of the talk, he will describe efficient optimal methods for inference of the pose of objects in images, given a loopy graph model for the object structure and appearance. In one approach, linearly augmented tree models are proposed that enable efficient scale and rotation invariant matching.

In another approach, articulated pose estimation with loopy graph models is made efficient via a branch-and-bound strategy for finding the globally optimal human body pose.

This work was conducted in collaboration with Hao Jiang (Boston College), Tai-Peng Tian (GE Research Labs), Kun He (Boston U) and Ramazan Gokberk Cinbis (INRIA Grenoble), and appears in the following papers: “Fast Globally Optimal 2D Human Detection with Loopy Graph Models” in CVPR 2010, “Scale Resilient, Rotation Invariant Articulated Object Matching” in CVPR 2012, and “Contextual Object Detection using Set-based Classification” in ECCV 2012.

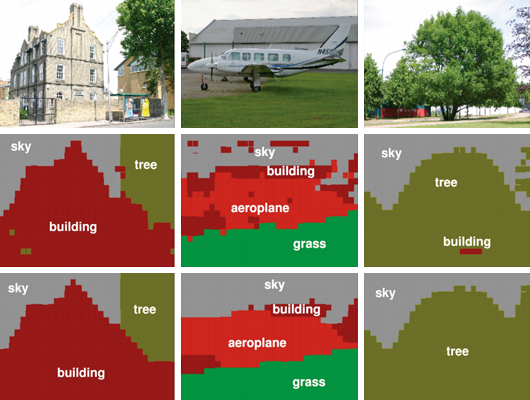

Segmentation Driven Object Detection with Fisher Vectors

Jakob Verbeek of the LEAR team at INRIA, Grenoble, France, will present an object detection system based on the powerful Fisher vector (FV) image representation in combination with spatial pyramids computed over SIFT descriptors.

To alleviate the memory requirements of the high dimensional FV representation, we exploit a recent segmentation-based method to generate class-independent object detection hypotheses, in combination with data compression techniques. Our main contribution, however, is a method to produce tentative object segmentation masks to suppress background clutter. Re-weighting the local image features based on these masks is shown to improve object detection significantly. To further improve the detector performance, we additionally compute these representations over local color descriptors, and include a contextual feature in the form of a full-image FV descriptor.

In the experimental evaluation based on the VOC 2007 and 2010 datasets, we observe excellent detection performance for our method. It performs better than or comparably to many recent state-of-the-art detectors, including ones that use more sophisticated forms of inter-class contextual cues.

Additionally, including a basic form of inter-category context leads, to the best of our knowledge, to the best detection results reported to date on these datasets.

This work will be published in a forthcoming ICCV 2013 paper.

Searching with Solr

Eng. Giuseppe Becchi, founder of the Wikido events portal, will hold a technical seminar about Solr entitled “Searching with Solr” on Monday, July 8, 2013, at the Media Integration and Communication Center.

Abstract:

- What is Solr and how it came about.

- Why Solr? Reliable, Fast, Supported, Open Source, Tunable Scoring, Lucene based.

- Installing & Bringing Up Solr in 10 minutes.

Solr is the popular, blazing fast open source enterprise search platform from the Apache Lucene project. Its major features include powerful full-text search, hit highlighting, faceted search, near real-time indexing, dynamic clustering, database integration, rich document (e.g., Word, PDF) handling, and geospatial search.

During the meeting, we’ll see how to:

- install and deploy Solr (also under Tomcat)

- configure the two xml config files: schema.xml and solrconfig.xml

- connect Solr to different data sources: XML, MySQL, PDF (using Apache Tika)

- configure a tokenizer for different languages

- configure result scoring and perform a faceted and a geospatial search

- configure and perform a “morelikethis” search
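As a rough illustration of the faceted, geospatial, and MoreLikeThis searches listed above, the sketch below builds request URLs for Solr's standard select handler without sending them. The host, the core name `collection1`, and the field names `title`, `description`, `category`, and `location` are assumptions; they must match whatever is declared in your own schema.xml, and `location` is assumed to be a spatial field type. MoreLikeThis is requested here through the `mlt` parameters of the search component, which is only one of the ways Solr exposes it.

```python
from urllib.parse import urlencode

# Assumed host and core name; adjust to your own installation.
SOLR_SELECT = "http://localhost:8983/solr/collection1/select"


def solr_url(params):
    """Build a select-handler URL from a list of (name, value) pairs."""
    return SOLR_SELECT + "?" + urlencode(params)


# Faceted search: matching documents plus per-category counts.
faceted = solr_url([
    ("q", "title:concert"),
    ("wt", "json"),
    ("facet", "true"),
    ("facet.field", "category"),   # hypothetical field
])

# Geospatial filter: documents within 5 km of a point (lat,lon).
geospatial = solr_url([
    ("q", "*:*"),
    ("wt", "json"),
    ("fq", "{!geofilt}"),
    ("sfield", "location"),        # hypothetical spatial field
    ("pt", "43.77,11.25"),
    ("d", "5"),
])

# MoreLikeThis: for each hit, also return similar documents.
more_like_this = solr_url([
    ("q", "id:42"),                # hypothetical document id
    ("wt", "json"),
    ("mlt", "true"),
    ("mlt.fl", "title,description"),
    ("mlt.count", "5"),
])

for url in (faceted, geospatial, more_like_this):
    print(url)
```

Each URL can be pasted into a browser or fetched with any HTTP client once Solr is running and documents have been indexed.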

Material: participants are encouraged to bring a PC with Java 1.6 or greater and Tomcat installed. The tutorial file that will be used during the seminar can be downloaded at http://it.apache.contactlab.it/lucene/solr/4.3.1/ (the solr-4.3.1.tgz file).

Introduction to Oblong Industries’ Greenhouse SDK

Eng. Alessandro Valli, Software Engineer at Oblong Industries, will hold a technical seminar about the Greenhouse SDK on Friday, June 21, 2013, at the Media Integration and Communication Center.

Greenhouse is a toolkit to create interactive spaces: multi-screen, multi-user, multi-device, multi-machine interfaces leveraging gestural and spatial interaction. Working knowledge of C++ and OpenGL is recommended but not required. Greenhouse is available now for Mac OS X, with Linux support coming soon, and is free for non-commercial use.