The project’s goal is to develop a reliable face detector and tracker for indoor video surveillance. The problem we have been asked to address is to provide good-quality face images of people entering restricted areas. These images will be used for face recognition, and the face recognition system will return feedback stating whether the person has been recognized. Given the nature of the problem, it is very important to keep tracking the person as long as he is visible in the image plane, even if he has already been recognized; this prevents the system from raising repeated alarms for the same person.

Optimal face detection and tracking

In other words, what we aim to obtain is:

- a reliable detector to initialize the tracker: the detector must be sensitive enough to start the tracker as soon as an intruder enters the supervised environment;

- an efficient and robust tracker that follows the intruder without losing him until he leaves the supervised environment: as stated above, repeated alarms generated from the same track must be avoided, both to reduce computational cost and to reduce false positives;

- a fast and reliable face detector to extract face images of the tracked person: the face detector must be reliable in order to provide “good” face images of the target; what “good” means depends on the face recognition system, but usually the image has to be at the highest achievable resolution and well focused, and the face has to be as frontal as possible;

- a method to assess whether the tracker has lost the target or is still tracking it correctly (a “stop criterion”): it is important to detect situations in which the tracker has lost the target, because such situations may require special action.
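The document does not specify how the stop criterion works. As a minimal sketch, assume the tracker exposes a per-frame confidence in [0, 1] (for example, the best appearance similarity among the particles) and that a face-detector hit on the track counts as confirmation. The class `StopCriterion` and its `threshold` and `patience` parameters are hypothetical: the track is declared lost only after several consecutive low-confidence frames, so a single bad frame does not end it.

```python
from dataclasses import dataclass, field


@dataclass
class StopCriterion:
    """Hypothetical stop criterion (not the project's actual method).

    The track is declared lost when the tracker's confidence stays below
    `threshold` for `patience` consecutive frames; both values are
    illustrative assumptions.
    """
    threshold: float = 0.5
    patience: int = 10
    _low_frames: int = field(default=0, init=False)

    def update(self, confidence: float, detector_hit: bool = False) -> bool:
        """Feed one frame's confidence; return True if the target is considered lost."""
        if detector_hit or confidence >= self.threshold:
            # A face-detector confirmation or a confident frame resets the counter.
            self._low_frames = 0
        else:
            self._low_frames += 1
        return self._low_frames >= self.patience
```

For example, with `StopCriterion(threshold=0.5, patience=3)` the confidence sequence `0.9, 0.4, 0.3, 0.2, 0.8` yields `False, False, False, True, False`. Requiring several low frames in a row trades a short delay in detecting a lost track for fewer spurious track terminations, which would otherwise cause the repeated alarms the system tries to avoid.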

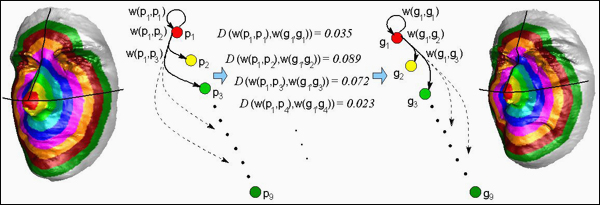

Currently, we use a face detector based on the Viola-Jones algorithm to initialize a particle-filter-based tracker that uses a histogram-based appearance model. The accuracy of the particle filter is greatly improved by the strong measurements provided by the face detector.
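To make the detector/tracker interplay concrete, here is a minimal sketch of one particle-filter cycle with a histogram appearance model, written under several assumptions that are not taken from the project. Frames are grey-level images stored as lists of lists. The target is a fixed-size square window. Motion is a random walk. The appearance likelihood is `exp(-lam * (1 - BC))`, where BC is the Bhattacharyya coefficient between histograms. When the face detector fires, its measurement is fused as an extra Gaussian term around the detected face centre. The function names (`histogram`, `pf_step`, `make_frame`) and the parameters `lam`, `motion_std` and `det_std` are hypothetical.

```python
import math
import random


def histogram(img, cx, cy, half, bins=8):
    """Normalized grey-level histogram of the square window centred at (cx, cy)."""
    h = [0.0] * bins
    rows, cols = len(img), len(img[0])
    for y in range(max(0, cy - half), min(rows, cy + half + 1)):
        for x in range(max(0, cx - half), min(cols, cx + half + 1)):
            h[img[y][x] * bins // 256] += 1.0
    total = sum(h)
    return [v / total for v in h] if total else h


def bhattacharyya(p, q):
    """Bhattacharyya coefficient: 1.0 for identical histograms, 0.0 for disjoint ones."""
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


def pf_step(img, particles, ref_hist, half, detection=None,
            motion_std=3.0, lam=20.0, det_std=4.0, rng=random):
    """Hypothetical predict/weight/resample cycle; returns (new particles, estimated centre)."""
    # Predict: random-walk motion model (an assumption, not the original's model).
    moved = [(round(x + rng.gauss(0, motion_std)), round(y + rng.gauss(0, motion_std)))
             for x, y in particles]
    weights = []
    for x, y in moved:
        # Appearance likelihood from histogram similarity to the reference model.
        bc = bhattacharyya(histogram(img, x, y, half), ref_hist)
        w = math.exp(-lam * (1.0 - bc))
        if detection is not None:
            # Detector measurement: Gaussian term around the detected face centre.
            dx, dy = x - detection[0], y - detection[1]
            w *= math.exp(-(dx * dx + dy * dy) / (2.0 * det_std ** 2))
        weights.append(w)
    n = len(moved)
    total = sum(weights)
    weights = [w / total for w in weights] if total > 0 else [1.0 / n] * n
    # Estimate: weighted mean of the particle positions.
    est = (sum(w * p[0] for w, p in zip(weights, moved)),
           sum(w * p[1] for w, p in zip(weights, moved)))
    # Systematic resampling: n evenly spaced pointers into the cumulative weights.
    resampled, i, cumulative = [], 0, weights[0]
    u = rng.random() / n
    for _ in range(n):
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(moved[i])
        u += 1.0 / n
    return resampled, est


def make_frame(cx, cy, size=60, half=5):
    """Synthetic test frame: a bright square 'face' on a dark background."""
    return [[200 if abs(x - cx) <= half and abs(y - cy) <= half else 20
             for x in range(size)] for y in range(size)]


if __name__ == "__main__":
    rng = random.Random(0)
    ref = histogram(make_frame(30, 30), 30, 30, 5)  # model taken where the detector fired
    particles = [(30, 30)] * 200                     # tracker initialised by the detection
    frame = make_frame(34, 32)                       # the face has moved
    for _ in range(5):
        particles, est = pf_step(frame, particles, ref, 5, rng=rng)
    print(est)
```

Multiplying by the detection term concentrates the weights on particles that agree with the detector. This is one simple way in which a strong detector measurement can sharpen a histogram-only likelihood, which on its own is fairly flat around the target.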

To provide the face recognition system with a reasonably small number of face images, a method to evaluate the quality of the captured images is needed. We take image resolution and symmetry into account so that, for each detected person, we store only images of increasing quality.
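The exact quality measure is not given. As a hedged sketch, assume grey-level face crops stored as lists of lists. Symmetry is measured as one minus the normalized mean absolute difference between each row and its mirror image. Resolution is the crop area relative to a hypothetical reference size `ref_side`, saturating at 1. The two are multiplied into a single score. The names `symmetry`, `quality` and `FaceLog` are illustrative, not the project's.

```python
def symmetry(face):
    """1.0 for a perfectly left-right symmetric grey-level patch, lower otherwise."""
    cols = len(face[0])
    diff, count = 0, 0
    for row in face:
        for x in range(cols // 2):
            # Compare each pixel with its mirror across the vertical axis.
            diff += abs(row[x] - row[cols - 1 - x])
            count += 1
    return 1.0 - diff / (255.0 * count) if count else 0.0


def quality(face, ref_side=100):
    """Hypothetical score: resolution term (saturating at ref_side x ref_side) times symmetry."""
    rows, cols = len(face), len(face[0])
    resolution = min(1.0, (rows * cols) / float(ref_side * ref_side))
    return resolution * symmetry(face)


class FaceLog:
    """Per-track store that keeps only faces improving on the best quality so far."""

    def __init__(self):
        self.best = -1.0
        self.faces = []  # list of (quality, face) pairs

    def offer(self, face):
        """Store the face and return True only if it beats the current best."""
        q = quality(face)
        if q > self.best:
            self.best = q
            self.faces.append((q, face))
            return True
        return False
```

Because a face is appended only when it beats the current best, each track's stored list is already sorted by increasing quality, and poor frames never reach the recognition system. A real system would probably also weight pose or focus; the product of two terms here is just the simplest combination.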

Below are a few sample videos, each with the sequence of faces grabbed from it. The system orders the faces by quality, increasing from left to right.

On top of face tracking, it is easy to build a face obfuscation application, although its requirements may differ slightly from those of face logging. The following video shows an example:
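The obfuscation technique used in the video is not described. As an assumption, here is pixelation (block averaging) applied to whatever box the tracker reports. Presumably obfuscation has to cover the face in every frame, regardless of image quality, so the sketch simply processes the box it is given. The `pixelate` function and its `block` parameter are hypothetical.

```python
def pixelate(img, x0, y0, x1, y1, block=8):
    """Return a copy of a grey-level image where the box [x0, x1) x [y0, y1)
    is replaced by the integer mean of each block x block cell."""
    out = [row[:] for row in img]
    # Clip the tracked box to the image borders.
    x0, y0 = max(0, x0), max(0, y0)
    x1, y1 = min(len(img[0]), x1), min(len(img), y1)
    for by in range(y0, y1, block):
        for bx in range(x0, x1, block):
            ys = range(by, min(by + block, y1))
            xs = range(bx, min(bx + block, x1))
            cells = [img[y][x] for y in ys for x in xs]
            mean = sum(cells) // len(cells)
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out
```

The input frame is left untouched, so the same frame can still feed the face-logging path while the obfuscated copy is displayed or recorded.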